> My large QP portfolio model does not solve with GAMS/KNITRO.

Yes, for large problems you will get:

Memory estimate for Hessian is too small.

Increase the <modelname>.workfactor option from the current value of 1

For 2000 stocks with WORKFACTOR=10 I got the same error.

Even if the model is formulated as a QCP model, the GAMS/KNITRO link does not set up the Hessian efficiently. Instead, it looks like the QP is handled as a general NLP.

There are two easy workarounds. First, you can solve this with IPOPT. The GAMS link for IPOPT is smarter in dealing with second derivatives than the link for KNITRO (as of GAMS 23.5). Note that it is better to solve the model as a QCP than as an NLP: when the model is declared as an NLP, GAMS does not recognize it as a QP and cannot exploit that structure:

| model type | GAMS generation time | IPOPT time | function evaluation time | Total solver time |
|---|---|---|---|---|
| NLP | 38 | 21.8 | 39.1 | 61.0 |
| QCP | 38 | 21.6 | 9.8 | 31.4 |

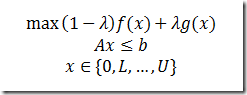

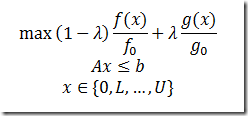

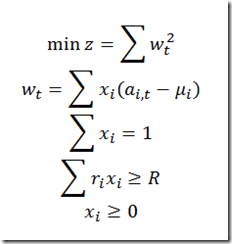

A second possibility is to reformulate model (1) into model (2), in which the matrix Q appears only in linear constraints.

This formulation can be solved with KNITRO without the Hessian storage problems.
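The equations for the two models are not reproduced in the text. As a sketch only, assuming the standard minimum-variance portfolio setup, the reformulation might look as follows. The budget and minimum-return constraints, the symbols $\mu_i$ and $\rho$, and the auxiliary variables $y_i$ are assumptions for illustration and are not taken from the original models.

$$
\begin{aligned}
\text{(1)}\quad & \min_{x}\ \sum_i \sum_j x_i\, q_{i,j}\, x_j
 && \text{s.t. } \sum_i x_i = 1,\ \ \sum_i \mu_i x_i \ge \rho,\ \ x_i \ge 0 \\
\text{(2)}\quad & \min_{x,y}\ \sum_i x_i\, y_i
 && \text{s.t. } y_i = \sum_j q_{i,j}\, x_j,\ \ \sum_i x_i = 1,\ \ \sum_i \mu_i x_i \ge \rho,\ \ x_i \ge 0
\end{aligned}
$$

Under these assumptions the objective of (2) contains only one bilinear term per stock, so its Hessian is very sparse, while the dense matrix Q enters only through linear equations. That would be consistent with the Hessian memory problem disappearing.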

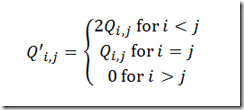

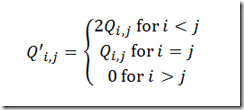

As an aside, we can improve formulation (1) by replacing the variance-covariance matrix Q by a triangular matrix: the diagonal is kept, the off-diagonal entries on one side are doubled, and those on the other side are dropped.

Here we exploit the symmetry of the quadratic form x’Qx. This substantially reduces the GAMS generation time and also shaves some more time off the solver:

| model type | GAMS generation time | IPOPT time | function evaluation time | Total solver time |
|---|---|---|---|---|
| QCP, triangular Q | 18.8 | 21.3 | 4.6 | 26 |
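To illustrate why a triangular matrix can stand in for Q, here is a minimal Python sketch (not the GAMS model itself). It assumes the upper triangle is the one that is kept; the 3x3 matrix, the weights, and the helper names `triangularize`, `quad_form`, and `nonzeros` are made up for illustration.

```python
def triangularize(q):
    """Upper-triangular matrix with the same quadratic form as symmetric q:
    keep the diagonal, double the entries above it, drop those below it."""
    n = len(q)
    return [[q[i][j] if i == j else (2.0 * q[i][j] if i < j else 0.0)
             for j in range(n)]
            for i in range(n)]


def quad_form(q, x):
    """Evaluate x'Qx, visiting only the nonzero entries of q."""
    n = len(x)
    return sum(x[i] * q[i][j] * x[j]
               for i in range(n) for j in range(n) if q[i][j] != 0.0)


def nonzeros(q):
    """Number of nonzero entries, i.e. terms in the generated quadratic form."""
    return sum(1 for row in q for v in row if v != 0.0)


# Hypothetical symmetric covariance matrix and portfolio weights.
Q = [[0.040, 0.006, 0.010],
     [0.006, 0.090, 0.012],
     [0.010, 0.012, 0.160]]
x = [0.5, 0.3, 0.2]

Q_tri = triangularize(Q)
full_value = quad_form(Q, x)     # uses all n*n entries
tri_value = quad_form(Q_tri, x)  # uses only n*(n+1)/2 entries
```

Both evaluations give the same value (up to rounding), but the triangular version has roughly half as many terms to generate and differentiate.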

We can also apply the triangular storage scheme to model (2). With a solver like SNOPT this leads to a very fast total solution time (i.e. generation time + solver time).

| model type | GAMS generation time | SNOPT time |
|---|---|---|
| NLP, triangular Q in linear constraints | 1 | 5 |
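The idea of moving a triangular Q into linear constraints can be checked numerically with a small Python sketch. It assumes that model (2) defines auxiliary variables y through linear equations in x and minimizes the bilinear sum of x(i)*y(i); the matrices, the weights, and the helper names are hypothetical.

```python
# Hypothetical symmetric covariance matrix ...
Q_FULL = [[0.040, 0.006, 0.010],
          [0.006, 0.090, 0.012],
          [0.010, 0.012, 0.160]]
# ... and its triangular counterpart (diagonal kept, upper entries doubled).
Q_TRI = [[0.040, 0.012, 0.020],
         [0.000, 0.090, 0.024],
         [0.000, 0.000, 0.160]]


def linear_part(q_tri, x):
    """y(i) = sum over j >= i of q_tri(i,j) * x(j): the linear constraints."""
    n = len(x)
    return [sum(q_tri[i][j] * x[j] for j in range(i, n)) for i in range(n)]


def bilinear_objective(x, y):
    """sum over i of x(i) * y(i): the only nonlinear part of the model."""
    return sum(xi * yi for xi, yi in zip(x, y))


def full_quad_form(q, x):
    """Reference value x'Qx using the full symmetric matrix."""
    n = len(x)
    return sum(x[i] * q[i][j] * x[j] for i in range(n) for j in range(n))


x = [0.5, 0.3, 0.2]
y = linear_part(Q_TRI, x)
objective = bilinear_objective(x, y)
reference = full_quad_form(Q_FULL, x)
```

The bilinear objective reproduces x'Qx, while the nonlinear part of the model shrinks to one product per stock.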

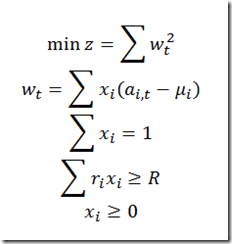

If needed, we can speed this up even further by using the mean-adjusted returns directly instead of the covariances:

I used T=300 time periods. This formulation yields:

| model type | GAMS generation time | SNOPT time |
|---|---|---|
| NLP, mean-adjusted returns | 0.5 | 2.3 |
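The equivalence behind the mean-adjusted formulation can be sketched in Python. The assumption here is that the covariances are computed from the same return data with a 1/T scaling; the original may use a different scaling such as 1/(T-1), which only changes the objective by a constant factor. The return data, weights, and function names are made up for illustration.

```python
def mean_adjusted(returns):
    """d(t,i) = r(t,i) - mean over t of r(t,i)."""
    T, n = len(returns), len(returns[0])
    mu = [sum(returns[t][i] for t in range(T)) / T for i in range(n)]
    return [[returns[t][i] - mu[i] for i in range(n)] for t in range(T)]


def covariance(d):
    """q(i,j) = (1/T) * sum over t of d(t,i) * d(t,j) (assumed 1/T scaling)."""
    T, n = len(d), len(d[0])
    return [[sum(d[t][i] * d[t][j] for t in range(T)) / T for j in range(n)]
            for i in range(n)]


def variance_via_q(q, x):
    """Portfolio variance as the quadratic form x'Qx."""
    n = len(x)
    return sum(x[i] * q[i][j] * x[j] for i in range(n) for j in range(n))


def variance_via_returns(d, x):
    """Portfolio variance as (1/T) * sum over t of w(t)^2,
    where w(t) = sum over i of d(t,i) * x(i) is linear in x."""
    T = len(d)
    w = [sum(d_t[i] * x[i] for i in range(len(x))) for d_t in d]
    return sum(w_t * w_t for w_t in w) / T


# Hypothetical returns: T = 4 periods, n = 3 stocks.
RETURNS = [[0.02, -0.01, 0.03],
           [0.01, 0.04, -0.02],
           [-0.03, 0.02, 0.01],
           [0.04, 0.00, 0.02]]
x = [0.5, 0.3, 0.2]

d = mean_adjusted(RETURNS)
v_q = variance_via_q(covariance(d), x)
v_r = variance_via_returns(d, x)
```

Both routes give the same variance. For 2000 stocks and T=300 the mean-adjusted data has 300 x 2000 = 600,000 entries, compared with 2000 x 2001 / 2 = 2,001,000 entries for a triangular Q, which may explain part of the speedup.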

Even for a simple QP portfolio model, careful modeling can improve performance significantly.