- On an \(n \times n\) chessboard, place as many queens as possible such that none of them is attacked.

- Given this, how many different ways can we place those queens?

We use the usual \(8 \times 8\) board.

Rooks

A related, simpler problem is placing rooks. The optimization model is very simple; it is an assignment problem:

| n-Rooks Problem |

|---|

| \[\begin{align}\max&\> \color{DarkRed}z=\sum_{i,j} \color{DarkRed} x_{i,j}\\ & \sum_j \color{DarkRed}x_{i,j} \le 1 && \forall i\\& \sum_i \color{DarkRed}x_{i,j} \le 1 && \forall j\\ & \color{DarkRed}x_{i,j}\in\{0,1\} \end{align}\] |

For an \(8 \times 8\) board it is obvious that the number of rooks we can place is 8. Here we show two configurations:

We can easily answer the question: how many alternative configurations with 8 rooks are there? The answer is \[n! = 8! = 40,320\] Basically, we enumerate all row and column permutations of the diagonal depicted above. We can use the solution pool in solvers like Cplex and Gurobi to find all of them. Here I use Cplex and the performance is phenomenal: all 40,320 solutions are found in 16 seconds.
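The count is easy to reproduce without a solver. The sketch below is plain Python, not the GAMS/Cplex model used here, and the name `rook_placements` is made up for illustration. It relies on the observation that a placement of \(n\) non-attacking rooks is a permutation: row \(i\) holds its rook in column `perm[i]`.

```python
from itertools import permutations
from math import factorial

def rook_placements(n):
    """Return an iterator over all placements of n non-attacking rooks.
    Each placement is a tuple: entry i is the column (0-based) of the rook in row i."""
    return permutations(range(n))

n = 8
count = sum(1 for _ in rook_placements(n))
print(count, count == factorial(n))
```

For \(n=8\) this reports 40320, the same number the solution pool delivers.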

Board

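In the models in this write-up, I assume a board with coordinates \((i,j)\), where \(i=1,\dots,8\) is the row (counted from the top) and \(j=1,\dots,8\) is the column (counted from the left):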

| Coordinate System in Models |

This also means that the diagonals:

| Main diagonal and anti-diagonal |

have the form \(\{(i,j)|i=j\}\) and \(\{(i,j)|i+j=9\}\). More generally, the downward-sloping main diagonals satisfy \[i=j+k\] for \(k=-6,\dots,6\), and the anti-diagonals satisfy \[i+j=k\] for \(k=3,\dots,15\). The corner cells (\(k=\pm 7\) and \(k=2,16\), respectively) form diagonals of length one and are left out, as they cannot give rise to a conflict. We can illustrate this with:

| Diagonals and anti-diagonals |

The first picture has cell values \(a_{i,j}=i-j\) and the second one has cell values \(a_{i,j}=i+j\).
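To make the indexing concrete, here is a small Python sketch (my own illustration, with made-up helper names `diagonal` and `anti_diagonal`) that collects the cells on each diagonal using the same 1-based \((i,j)\) coordinates.

```python
n = 8
cells = [(i, j) for i in range(1, n + 1) for j in range(1, n + 1)]

def diagonal(k):
    """Cells on the main diagonal i - j = k."""
    return [(i, j) for (i, j) in cells if i - j == k]

def anti_diagonal(k):
    """Cells on the anti-diagonal i + j = k."""
    return [(i, j) for (i, j) in cells if i + j == k]

main_ks = range(-(n - 2), n - 1)   # k = -6, ..., 6
anti_ks = range(3, 2 * n)          # k = 3, ..., 15

# Every diagonal in these ranges has at least two cells.
print(min(len(diagonal(k)) for k in main_ks))
print(min(len(anti_diagonal(k)) for k in anti_ks))

# The long anti-diagonal i + j = 9 runs from (1,8) to (8,1).
print(anti_diagonal(9))
```

The values of \(k\) outside these ranges only pick up single corner cells, which is why the models do not need a constraint for them.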

Bishops

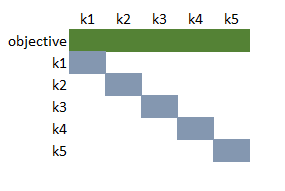

The model for placing bishops is slightly more complicated: we need to check the main diagonals and the anti-diagonals. We use the notation from the previous section. With this, our model can look like:

| n-Bishops Problem |

|---|

| \[\begin{align}\max& \>\color{DarkRed}z=\sum_{i,j} \color{DarkRed} x_{i,j}\\ & \sum_{i-j=k} \color{DarkRed}x_{i,j} \le 1 && k=-(n-2),\dots,(n-2)\\& \sum_{i+j=k} \color{DarkRed}x_{i,j} \le 1 && k=3,\dots,2n-1\\ & \color{DarkRed}x_{i,j}\in\{0,1\} \end{align}\] |

Note that these constraints translate directly into GAMS:

bishop_main(k1).. sum((i,j)$(v(i)-v(j)=v(k1)),x(i,j)) =l= 1;

bishop_anti(k2).. sum((i,j)$(v(i)+v(j)=v(k2)),x(i,j)) =l= 1;

We can accommodate 14 bishops. There are 256 different solutions (according to Cplex).
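These numbers can be cross-checked by brute force. The Python sketch below is not the MIP model; it is an enumeration under one extra observation that the text does not use: bishops on cells with even \(i-j\) never interact with bishops on cells with odd \(i-j\), so the two colours can be counted separately and combined. The function names are made up for illustration.

```python
from collections import Counter

def placements_by_size(n, parity):
    """Count non-attacking bishop placements on the cells with
    (i - j) % 2 == parity, grouped by the number of bishops placed."""
    diags = [k for k in range(-(n - 1), n) if k % 2 == parity]
    counts = Counter()

    def place(pos, used_anti, placed):
        if pos == len(diags):
            counts[placed] += 1
            return
        k = diags[pos]
        place(pos + 1, used_anti, placed)              # leave diagonal k empty
        for i in range(max(1, 1 + k), min(n, n + k) + 1):
            s = i + (i - k)                            # anti-diagonal index i + j
            if s not in used_anti:
                place(pos + 1, used_anti | {s}, placed + 1)

    place(0, frozenset(), 0)
    return counts

def count_max_bishops(n):
    """Return (maximum number of bishops, number of maximum placements)."""
    a = placements_by_size(n, 0)
    b = placements_by_size(n, 1)
    return max(a) + max(b), a[max(a)] * b[max(b)]

print(count_max_bishops(8))
```

Each main diagonal receives at most one bishop by construction, and the set `used_anti` enforces the anti-diagonal constraints, so every feasible placement is visited exactly once.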

Note: the GAMS display of the solution is not so useful:

---- 47 VARIABLE x.L 1 2 4 5 7 8 1 1 1 1 1 2 1 3 1 1 6 1 1 7 1 8 1 1 1 1

For this reason I show the solution using the \(\LaTeX\) chessboard package [5]. You should be aware that its coordinate system differs from the one we use in the models.

Queens

The \(n\) queens problem is now very simple: we just need to combine the two previous models.

| n-Queens Problem |

|---|

| \[\begin{align}\max&\> \color{DarkRed}z= \sum_{i,j} \color{DarkRed} x_{i,j}\\ & \sum_j \color{DarkRed}x_{i,j} \le 1 && \forall i\\& \sum_i \color{DarkRed}x_{i,j} \le 1 && \forall j\\ & \sum_{i-j=k} \color{DarkRed}x_{i,j} \le 1 && k=-(n-2),\dots,(n-2)\\& \sum_{i+j=k} \color{DarkRed}x_{i,j} \le 1 && k=3,\dots,2n-1\\ & \color{DarkRed}x_{i,j}\in\{0,1\} \end{align}\] |

A solution can look like:

We can place 8 queens and there are 92 solutions.
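For comparison, here is a classic backtracking enumeration in Python. It is a sketch rather than the MIP approach of this post, and it assumes up front that every row holds exactly one queen (which is fine for \(n=8\), where 8 queens fit). The three sets mirror the column, main-diagonal and anti-diagonal constraints of the model.

```python
def queens(n):
    """Yield every placement of n non-attacking queens as a tuple:
    entry i-1 is the column j of the queen in row i (1-based)."""
    cols, diag, anti = set(), set(), set()
    placement = []

    def place(i):
        if i > n:
            yield tuple(placement)
            return
        for j in range(1, n + 1):
            if j in cols or (i - j) in diag or (i + j) in anti:
                continue
            cols.add(j)
            diag.add(i - j)
            anti.add(i + j)
            placement.append(j)
            yield from place(i + 1)
            placement.pop()
            cols.remove(j)
            diag.remove(i - j)
            anti.remove(i + j)

    yield from place(1)

solutions = list(queens(8))
print(len(solutions))
```

This finds 92 placements, so it can serve as an independent reference when the solution pool count looks suspicious.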

Notes:

- Cplex delivered 91 solutions. This seems to be a tolerance issue. I used a solution pool absolute gap of zero (option SolnPoolAGap=0 or, as it is called in recent versions, mip.pool.absgap=0) [4]. As the objective changes in steps of one, it is safe to set the absolute gap to 0.01. With this gap we found all 92 solutions. Is this a bug? Maybe. Possibly. Probably. The 92nd solution is not supposed to be cut off. Obviously, poor scaling is not an issue here: this model is as well-scaled as you can get. I suspect that the (somewhat poorly understood) combination of tolerances (feasibility, integer, optimality) causes Cplex to behave this way. If too many binary variables assume a value like 0.99999 (i.e., within the integer tolerance of 1), the objective falls slightly below 8 and the solution is rejected under a zero gap. Indeed, we can also get to 92 solutions by setting the integer tolerance epint=0. Note that setting the integer tolerance to zero will often increase solution times.

Often tolerances "help" us: they make it easier to find feasible or optimal solutions. Here is a case when they really cause problems.

Of course this discussion is not a good advertisement for Mixed Integer Programming models. Preferably we should not have to worry about technical details such as tolerances when building and solving models that are otherwise fairly straightforward (no big-M's, well-scaled, small in size).

- A two-step algorithm can help to prevent the tolerance issue discussed above:

  - Solve the maximization problem: we find the maximum number of queens \(z^*\).

  - Create a feasibility problem with the number of queens equal to \(z^*\), i.e., we add the constraint \[\sum_{i,j} x_{i,j}=z^*\] This problem has no objective. Find all feasible integer solutions using the solution pool. We don't need to set a solution pool gap for this.

- Many of the 92 solutions are the result of simple symmetric operations, such as rotating the board, or reflection [2]. The number of different solutions after ignoring these symmetries is just 12. The GAMS model in [3] finds those.

- The model in [3] handles the bishop constraints differently, by calculating an offset and writing \[\sum_i x_{i,i +\mathit{sh}_s} \le 1 \>\>\forall s\] I somewhat prefer our approach.
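The reduction from 92 to 12 solutions can be reproduced with a few lines of Python. This is a sketch of one way to do it and not the method used by the GAMS model in [3]: each solution is mapped to its 8 images under rotations and reflections, and the smallest image serves as the representative of its class. The helper names are made up.

```python
def queens(n):
    """All n-queens solutions; each is a tuple of 0-based columns, one per row."""
    def extend(partial):
        i = len(partial)
        if i == n:
            yield tuple(partial)
            return
        for j in range(n):
            if all(j != c and abs(j - c) != i - r for r, c in enumerate(partial)):
                yield from extend(partial + [j])
    return list(extend([]))

def symmetries(sol):
    """The 8 images of a solution under rotations and reflections of the board."""
    n = len(sol)
    cells = list(enumerate(sol))                       # (row, column) pairs
    images = []
    for _ in range(4):
        cells = [(c, n - 1 - r) for r, c in cells]     # rotate by 90 degrees
        mirrored = [(r, n - 1 - c) for r, c in cells]  # reflect left-right
        for variant in (cells, mirrored):
            images.append(tuple(c for _, c in sorted(variant)))
    return images

all_solutions = queens(8)
fundamental = {min(symmetries(s)) for s in all_solutions}
print(len(all_solutions), len(fundamental))
```

The two printed numbers are 92 and 12.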

Kings

Kings are handled quite cleverly in [1]. The authors observe that there cannot be more than one king in each \(2 \times 2\) block \[\begin{matrix} x_{i,j} & x_{i,j+1}\\ x_{i+1,j} & x_{i+1,j+1}\end{matrix}\] Note that we only have to look forward (and downward) as a previous block would have covered things to the left (or above). The model can look like:

| n-Kings Problem |

|---|

| \[\begin{align}\max& \>\color{DarkRed}z=\sum_{i,j} \color{DarkRed} x_{i,j}\\ & \color{DarkRed}x_{i,j}+\color{DarkRed}x_{i+1,j}+\color{DarkRed}x_{i,j+1}+\color{DarkRed}x_{i+1,j+1}\le 1 && \forall i,j\le n-1\\ & \color{DarkRed}x_{i,j}\in\{0,1\} \end{align}\] |

Two solutions are:

We can place 16 kings, and there are a lot of possible configurations: 281,571 (says Cplex).
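A count this large is worth double-checking without a solver. The Python sketch below uses a row-by-row dynamic program instead of the \(2 \times 2\) block formulation: a row is a bit mask without adjacent kings, and two consecutive rows are compatible if no king touches one in the previous row, diagonally or vertically. This is my own cross-check, and the function name is made up.

```python
from collections import Counter

def count_kings(n):
    """Return (maximum number of non-attacking kings, number of such placements)."""
    # Row patterns without two horizontally adjacent kings.
    rows = [m for m in range(1 << n) if (m & (m << 1)) == 0]
    # State: (mask of the previous row, kings placed so far) -> number of ways.
    states = Counter({(0, 0): 1})
    for _ in range(n):
        nxt = Counter()
        for (prev, k), ways in states.items():
            blocked = prev | (prev << 1) | (prev >> 1)   # cells attacked from above
            for m in rows:
                if (m & blocked) == 0:
                    nxt[(m, k + bin(m).count("1"))] += ways
        states = nxt
    totals = Counter()
    for (_, k), ways in states.items():
        totals[k] += ways
    best = max(totals)
    return best, totals[best]

print(count_kings(8))
```

For the \(8 \times 8\) board this agrees with the Cplex numbers: 16 kings and 281,571 configurations.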

Knights

To place knights we set up a set \(\mathit{jump}_{i,j,i',j'}\) indicating if we can jump from cell \((i,j)\) to cell \((i',j')\). We only need to look forward, so for each \((i,j)\) we need to consider four cases:

| | \(j\) | \(j+1\) | \(j+2\) |
|---|---|---|---|
| \(i-2\) | | \(x_{i-2,j+1}\) | |
| \(i-1\) | | | \(x_{i-1,j+2}\) |
| \(i\) | \(x_{i,j}\) | | |
| \(i+1\) | | | \(x_{i+1,j+2}\) |
| \(i+2\) | | \(x_{i+2,j+1}\) | |

Note that near the border we may have fewer than four cases. In GAMS we can populate the set \(\mathit{jump}\) straightforwardly:

jump(i,j,i-2,j+1) = yes;

jump(i,j,i-1,j+2) = yes;

jump(i,j,i+1,j+2) = yes;

jump(i,j,i+2,j+1) = yes;
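The same set is easy to build in Python, which gives a quick way to check the "forward only" idea. This is a sketch with my own names, not a translation of the full GAMS model. It counts the pairwise constraints and verifies that putting knights on all cells of one colour satisfies them.

```python
n = 8
cells = [(i, j) for i in range(1, n + 1) for j in range(1, n + 1)]
on_board = set(cells)

# Forward moves only: the target column j' is always to the right of j.
offsets = [(-2, 1), (-1, 2), (1, 2), (2, 1)]
jump = [((i, j), (i + di, j + dj))
        for (i, j) in cells
        for (di, dj) in offsets
        if (i + di, j + dj) in on_board]

print(len(jump))   # number of pairwise constraints in the model

# Put a knight on every cell of one colour and check all constraints.
for parity in (0, 1):
    x = {c: int((c[0] + c[1]) % 2 == parity) for c in cells}
    feasible = all(x[a] + x[b] <= 1 for a, b in jump)
    print(sum(x.values()), feasible)
```

Each attacking pair of cells appears exactly once in `jump`, which gives 168 constraints for the \(8 \times 8\) board. Both colourings place 32 knights and are feasible, because a knight move always changes the parity of \(i+j\).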

The model can look like:

| n-Knights Problem |

|---|

| \[\begin{align}\max& \>\color{DarkRed}z=\sum_{i,j} \color{DarkRed} x_{i,j}\\ & \color{DarkRed}x_{i,j}+\color{DarkRed}x_{i',j'}\le 1 && \forall i,j,i',j'|\mathit{jump}_{i,j,i',j'}\\ & \color{DarkRed}x_{i,j}\in\{0,1\} \end{align}\] |

We can place 32 knights. There are only two different solutions:

Conclusion

The solution pool is a powerful tool to enumerate possibly large numbers of integer solutions. However, with default tolerances, setting the solution pool absolute gap tolerance to zero may cause perfectly good integer solutions to be missed. This is dicey stuff.
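To see how a zero gap could bite, here is a toy calculation in Python. It only illustrates the mechanism I suspect; it does not model what Cplex does internally. The acceptance rule `kept_in_pool` and the value used for the integrality tolerance are assumptions made for this example.

```python
def kept_in_pool(objective, best_objective, abs_gap):
    """Toy acceptance rule for a maximization problem: keep a solution
    if its objective is within abs_gap of the best objective."""
    return best_objective - objective <= abs_gap

integer_tol = 1e-5                 # assumed integrality tolerance
x = [1.0 - integer_tol] * 8        # eight "ones" that are slightly below 1
objective = sum(x)                 # a little less than 8

print(objective)
print(kept_in_pool(objective, 8.0, abs_gap=0.0))    # rejected under a zero gap
print(kept_in_pool(objective, 8.0, abs_gap=0.01))   # accepted with gap 0.01
```

With a gap of 0.01 the slightly fractional solution survives, while a gap of zero throws it out even though it represents a perfectly good placement of 8 queens.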

References

1. L. R. Foulds and D. G. Johnston, An Application of Graph Theory and Integer Programming: Chessboard Non-Attacking Puzzles, Mathematics Magazine, Vol. 57, No. 2 (Mar. 1984), pp. 95-104

2. Eight queens puzzle, https://en.wikipedia.org/wiki/Eight_queens_puzzle

3. Maximum Queens Chess Problem, https://www.gams.com/latest/gamslib_ml/queens.103

4. Absolute gap for solution pool, https://www.ibm.com/support/knowledgecenter/en/SSSA5P_12.7.0/ilog.odms.cplex.help/CPLEX/Parameters/topics/SolnPoolAGap.html

5. chessboard – Print chess boards, https://ctan.org/pkg/chessboard

6. E. Danna, M. Fenelon, Z. Gu, and R. Wunderling, Generating Multiple Solutions for Mixed Integer Programming Problems. In: M. Fischetti and D. P. Williamson (eds), Integer Programming and Combinatorial Optimization, IPCO 2007, Lecture Notes in Computer Science, vol. 4513, Springer, Berlin, Heidelberg, 2007