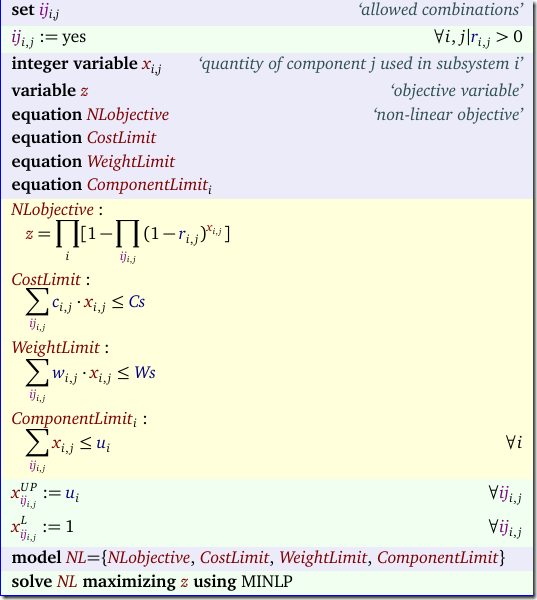

In http://yetanothermathprogrammingconsultant.blogspot.com/2015/11/global-optimality.html a small MINLP model is shown that does not solve very easily. Here it is again:

The model and the data come from: A Hybrid Parallel Genetic Algorithm for Reliability Optimization. The model is called RRAP, for Reliability-Redundancy Allocation Problem. We allow a mix of redundant components in order to maximize total system reliability, subject to weight and cost constraints. For small problems we can use a simple mechanism to linearize the objective: just enumerate the possible configurations for a subsystem i. In this case we have only 4 different component types in a subsystem, and we can have at most u(i)=6 components in total in each subsystem. That means we can enumerate all possible combinations:
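The enumeration itself can be sketched in a few lines of Python. This is a minimal sketch, not the code behind this post: it assumes every subsystem needs at least one component and that a configuration is fully described by how many components of each type it contains. The function name `enumerate_configurations` and its arguments are made up for illustration.

```python
from itertools import product


def enumerate_configurations(num_types, max_total):
    """List all count vectors (n_1, ..., n_J) for one subsystem.

    Assumption: a configuration is valid when it uses at least one
    component and at most `max_total` components in total.
    """
    configs = []
    # Each component type can appear 0..max_total times; filter on the total.
    for counts in product(range(max_total + 1), repeat=num_types):
        if 1 <= sum(counts) <= max_total:
            configs.append(counts)
    return configs


# 4 component types, at most u(i) = 6 components in the subsystem
configs = enumerate_configurations(num_types=4, max_total=6)
print(len(configs))  # 209
```

The count can be checked by hand: there are C(10,4) = 210 non-negative count vectors with a total of at most 6, and we drop the empty one. Under the same assumptions, a subsystem with only 3 component types would have C(9,3) - 1 = 83 configurations.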

In total: 209 combinations for the most complex subsystems. With this enumeration scheme we can use a binary variable to indicate whether we select a certain combination for a subsystem. This lets us form a linear model:

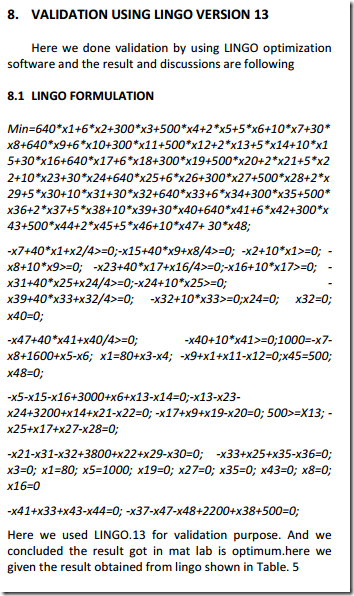

We can drop the ComponentLimit constraint, as it is now part of the enumeration process. Notice that our model is simpler than the one stated in the paper, which looks somewhat strange here and there, as if they got a little lost in the math. E.g. for the cost and weight limits the paper proposes:

I like my version much better.

The objective coefficients coeff(i,k) are calculated as:

Note: the subset ik(i,k) indicates that not all possible combinations (i,k) are allowed: some subsystems i have fewer possible configurations k. Also note that we are maximizing log(z) instead of z. Taking the log turns a product of subsystem reliabilities into a sum, which is what makes the objective linear. As log is a monotonically increasing transformation, this gives us the same optimal solution.
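To make the coefficient calculation concrete, here is a small Python sketch. It rests on an assumption that may not match this model exactly: the components inside a subsystem work in parallel and fail independently, so a configuration with n(j) copies of component type j has reliability 1 - prod_j (1 - r(j))^n(j), and the coefficient is the log of that value. The function names and the reliability numbers are hypothetical.

```python
import math


def subsystem_reliability(counts, reliabilities):
    """Reliability of one configuration of a subsystem.

    Assumption: parallel redundancy with independent failures, so the
    subsystem fails only if every installed component fails.
    """
    prob_all_fail = 1.0
    for n, r in zip(counts, reliabilities):
        prob_all_fail *= (1.0 - r) ** n
    return 1.0 - prob_all_fail


def log_coefficient(counts, reliabilities):
    """Objective coefficient for one (subsystem, configuration) pair."""
    return math.log(subsystem_reliability(counts, reliabilities))


# Hypothetical data: two component types with reliabilities 0.90 and 0.80
r = [0.90, 0.80]
single = subsystem_reliability((1, 0), r)   # one component of type 1
mixed = subsystem_reliability((1, 1), r)    # one of each type, about 0.98
print(single, mixed, log_coefficient((1, 1), r))
```

With coefficients like these, the sum of the selected coeff(i,k) over all subsystems equals log(z), so maximizing the sum selects the same configurations as maximizing z itself.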

As the problem is small, the final MIP is also not too big. It has a simple structure, and good MIP solvers solve it very quickly. This is a fairly standard way of dealing with this kind of problem, so it should be in our toolbox.