Introduction

Pyomo [1] is a popular Python based modeling tool. In [2] a question is posed about a situation where a certain constraint takes more than 8 hours to generate. As we shall see, the reason is that extra indices are used.

The constraint \[y_i = \sum_j x_{i,j} \>\>\>\forall i,j\] is really malformed. The extra \(\forall j\) is problematic. What does this mean? There are two obvious interpretations:

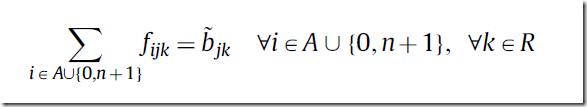

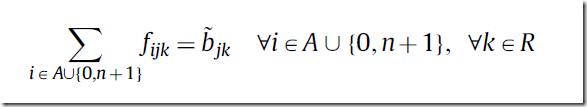

Journal papers are often typeset using LaTeX. Not much checking there, and as a result authors are free to write equations like [3]:

Here we have the same thing. We have a summation over \(i\) as well as a \(\forall i\).

The GAMS fragment corresponding to this example, shows GAMS will object to this construct:

AMPL also gives a syntax error. The message could be improved a bit:

The Pyomo equivalent can look like:

This fragment is a bit more difficult to read, largely due to syntactic clutter. But in any case: Python and Pyomo accepts this constraint as written. To see what is generated, we can use

This will show something like:

We see for each \(i\) we have three duplicate constraints. Instead of 6 constraints, we just should have 2. The way to fix this is to remove the function argument \(j\) from eqRule:

After this, model.Eq.pprint() produces

This looks much better.

The constraint in the original question was:

Using the knowledge of the previous paragraph we know this should really be:

A simple example

The constraint \[y_i = \sum_j x_{i,j} \>\>\>\forall i,j\] is really malformed. The extra \(\forall j\) is problematic. What does this mean? There are two obvious interpretations:

- One could say, this is just wrong. Below we will see that GAMS and AMPL follow this approach.

- We can also interpret this differently. Assume the inner \(j\) is scoped (i.e. local). Then we could read this as: repeat the constraint \(y_i = \sum_j x_{i,j}\), \(n\) times. Here \(n=|J|\) is the cardinality of set \(J\). This is what Pyomo is doing.

LaTeX: no checks

Journal papers are often typeset using LaTeX. Not much checking there, and as a result authors are free to write equations like [3]:

Here we have the same thing. We have a summation over \(i\) as well as a \(\forall i\).

GAMS: syntax error

The GAMS fragment corresponding to this example, shows GAMS will object to this construct:

11 equation e(i,j);

12 e(i,j).. y(i) =e= sum(j, x(i,j));

**** $125

**** 125 Set is under control already

13

**** 1 ERROR(S) 0 WARNING(S)

|

AMPL: syntax error

AMPL also gives a syntax error. The message could be improved a bit:

D:\etc>ampl x.mod

d:x.mod, line 8 (offset 155):

syntax error

context: e{i in I, j in J}: Y[i] = sum{j >>> in <<< J} X[i,j];

|

Pyomo: create duplicate constraints

The Pyomo equivalent can look like:

def eqRule(m,i,j):

return m.Y[i] == sum(m.X[i,j] for j in m.J);

model.Eq =

Constraint(model.I,model.J,rule=eqRule)

|

This fragment is a bit more difficult to read, largely due to syntactic clutter. But in any case: Python and Pyomo accepts this constraint as written. To see what is generated, we can use

model.Eq.pprint()

|

This will show something like:

Eq : Size=6,

Index=Eq_index, Active=True

Key : Lower : Body : Upper

: Active

('i1', 'j1') : 0.0 : Y[i1] - (X[i1,j1] + X[i1,j2] +

X[i1,j3]) : 0.0 : True

('i1', 'j2') : 0.0 : Y[i1] - (X[i1,j1] + X[i1,j2] +

X[i1,j3]) : 0.0 : True

('i1', 'j3') : 0.0 : Y[i1] - (X[i1,j1] + X[i1,j2] +

X[i1,j3]) : 0.0 : True

('i2', 'j1') : 0.0 : Y[i2] - (X[i2,j1] + X[i2,j2] +

X[i2,j3]) : 0.0 : True

('i2', 'j2') : 0.0 : Y[i2] - (X[i2,j1] + X[i2,j2] +

X[i2,j3]) : 0.0 : True

('i2', 'j3') : 0.0 : Y[i2] - (X[i2,j1] + X[i2,j2] +

X[i2,j3]) : 0.0 : True

|

We see for each \(i\) we have three duplicate constraints. Instead of 6 constraints, we just should have 2. The way to fix this is to remove the function argument \(j\) from eqRule:

def eqRule(m,i):

return m.Y[i] == sum(m.X[i,j] for j in m.J);

model.Eq = Constraint(model.I,rule=eqRule)

|

After this, model.Eq.pprint() produces

Eq : Size=2,

Index=I, Active=True

Key : Lower : Body : Upper

: Active

i1 :

0.0 : Y[i1] - (X[i1,j3] + X[i1,j2] + X[i1,j1]) : 0.0 :

True

i2 :

0.0 : Y[i2] - (X[i2,j3] + X[i2,j2] + X[i2,j1]) : 0.0 :

True

|

This looks much better.

The original problem

The constraint in the original question was:

def

period_capacity_dept(m, e, j, t, dp):

return sum(a[e, j, dp, t]*m.y[e,j,t] for

(e,j) in model.EJ)<= K[dp,t] + m.R[t,dp]

model.period_capacity_dept

= Constraint(E, J, T, DP, rule=period_capacity_dept)

|

Using the knowledge of the previous paragraph we know this should really be:

def

period_capacity_dept(m, t, dp):

return sum(a[e, j, dp, t]*m.y[e,j,t] for

(e,j) in model.EJ)<= K[dp,t] + m.R[t,dp]

model.period_capacity_dept

= Constraint(T, DP, rule=period_capacity_dept)

|

Pyomo mixes mathematical notation with programming. I think that is one of the reasons this bug is more difficult to see. In normal programming, adding an argument to a function has an obvious meaning. However in this case, adding e,j means in effect: \(\forall e,j\). If \(e\) and \(j\) belong to large sets, we can easily create a large number of duplicates.

Notes: Debugging Pyomo models

When debugging Pyomo models there are a few tricks to know about:

- Have a small data set available. Debugging with large data sets is very difficult and plain unpleasant.

- Use model.symbol.pprint()

- Create an LP file of the model. When using the pyomo script, use the convert option (don't forget the --symbolic-solver-labels flag). From Python code, use model.write(filename, io_options={'symbolic_solver_labels': True}).

References

- http://www.pyomo.org

- Pyomo: Simple inequality constraint takes unreasonable time to build, https://stackoverflow.com/questions/58034244/pyomo-simple-inequality-constraint-takes-unreasonable-time-to-build

- Checking math, https://yetanothermathprogrammingconsultant.blogspot.com/2012/03/checking-math.html