Regularly I am asked “What is the difference between Matlab and GAMS when modeling optimization problems?”. I’ll try to answer this question with some examples.

Matlab

In Matlab LP and MIP models are modeled using a matrix data structure. Basically a linear optimization model is viewed as:

| \[\boxed{\begin{align}\min\>&c^Tx\\&A_1 x\le b_1\\&A_2 x=b_2\\&\ell\le x \le u\end{align}}\] |

We need to populate the vectors \(c\), \(b_1\), \(b_2\), \(\ell\) and \(u\) and the matrices \(A_1\), \(A_2\) and then call linprog or intlinprog.

Some notes:

- The matrices \(A_1\), \(A_2\) can become very, very large and sparse for larger models. In that case it may help to use a sparse matrix storage scheme, preventing all the zeros being stored. We’ll see an example of this later on in a subsequent post.

- There are alternative modeling frameworks available for use with Matlab, including Tomlab and CVX. These systems take a step toward a somewhat more equation based modeling paradigm. In some sense one could think about them as positioned between pure Matlab and a modeling system like AMPL and GAMS.

- Instead of the Matlab optimization toolbox solvers linprog or intlinprog, you can also use high-performance solvers such as Cplex and Gurobi. They have a similar matrix oriented modeling interface as the Matlab solvers.

- Matlab’s quadratic solver quadprog also has a similar matrix oriented interface.

- General NLP (nonlinear programming) problems have a totally different interface in Matlab. They require the objective and constraints to be modeled as functions. Introducing nonlinearities to an LP model will force you to rewrite the the model code.

- In practice Matlab’s matrix approach works best for highly structured models, and is not as convenient for more complex and messy models.

- Many LP/MIP models in R are using an matrix-based modeling paradigm. Much of what I am saying here also applies to R.

- Indexing in Matlab is done by integers, and array indexing (vectors, matrices) is one-based.

- Matlab default variable bounds are \(-\infty \le x \le \infty\). Most LP and MIP solvers use a different default: if a variable has no bounds it is considered positive.

GAMS

GAMS is an equation based modeling system. This means an optimization model is collection of algebraic equations. For instance a capacity constraint can look like:

Mathematics:

\[\sum_j x_{i,j} \le cap_{i} \>\> \forall i\]

GAMS:

Capacity(i)..

sum(j, x(i,j)) =l= cap(i);

Of course we can write a whole LP as just one or two equations, but that is almost never a good modeling approach:

objective..

z =e= sum(j, c(j)*x(j));

e(i)..

sum(j, a(i,j)*x(j)) =e= b(i);

Notes:

- GAMS is similar to other modeling systems such as AMPL. These notes largely apply to those modeling systems as well.

- Equation based modeling allows an easy transition to non-linear programming. We don’t have to change the modeling paradigm when the model becomes non-linear.

- A properly designed model will is both compact and allows us to think and reason about the model. Often we don’t need to maintain a separate document that states the mathematical model.

- A modeling system helps to be efficient in writing and maintaining complex models.

- GAMS is somewhat unusual in not having an objective function but rather an objective variable. The objective function is formulated as a normal constraint.

- By default GAMS variables are free variables. As said before most other modeling software assume by default that variables are non-negative.

- Indexing in GAMS is done using strings.

A simple LP transportation problem

Let’s start with an extremely simple transportation problem from the GAMS model library (1) and originally from (2) (though with slightly different data, making the model degenerate).

The mathematical model looks like:

| \[\boxed{\begin{align}\min\>&\sum_{i,j} c_{i,j} x_{i,j}\\&\sum_j x_{i,j} \le a_i\>\>\forall i\\&\sum_i x_{i,j} \ge b_j\>\>\forall j\\&x_{i,j}\ge 0\end{align}}\] |

Below is a side-by-side comparison of the Matlab code and the GAMS model. As you will see the Matlab matrix approach is very different from an equation based language.

| Matlab code | GAMS code | |

|---|---|---|

| NumPlants = 2; NumMarkets = 3; | MatLab uses indexing by integers. | |

| GAMS is set based with set elements represented by strings | sets i canning plants /seattle, san-diego / j markets /new-york, chicago, topeka / ; | |

| a = [350 600]'; % capacity b = [325 300 275]'; % demand d = [2.5 1.7 1.8; % distance 2.5 1.8 1.4]; f = 90; % unit freight cost c = f * d / 1000; % freight | Ordering is important. We need to remember index=1 means Seattle or New-York depending on the situation. | |

| Ordering is not important. | parameters a(i) capacity of plant i in cases / seattle 350 san-diego 600 / b(j) demand at market j in cases / new-york 325 chicago 300 topeka 275 / ; table d(i,j) distance in thousands of miles new-york chicago topeka seattle 2.5 1.7 1.8 san-diego 2.5 1.8 1.4 ; scalar f freight in dollars per case per 1000 miles /90/; parameter c(i,j) transport cost in $1000 per case ; c(i,j) = f * d(i,j) / 1000 ; | |

| % allocate matrices Asupply = zeros(NumPlants,NumPlants*NumMarkets); Ademand = zeros(NumMarkets,NumPlants*NumMarkets);

% populate submatrices for i=1:NumPlants for j=1:NumMarkets Asupply(i, k) = 1; Ademand(j, k) = 1; end end

% populate matrix A and vectors B,C A = [Asupply;-Ademand]; B = [a;-b]; C = reshape(c',NumPlants*NumMarkets,1);

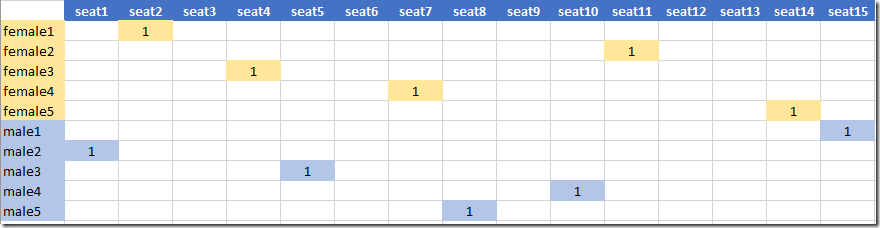

% positive variables lb = zeros(NumPlants*NumMarkets,1); | Lots of index calculations. Note that we flip the ≥ demand constraint to a ≤ constraint. The matrix A looks like: A = | |

| The GAMS code for the equations is much closer to the mathematical model. | variables x(i,j) shipment quantities in cases z total transportation costs in $1000 ; positive variable x ; equations cost define objective function supply(i) observe supply limit at plant i demand(j) satisfy demand at market j ; cost.. z =e= sum((i,j), c(i,j)*x(i,j)) ; supply(i).. sum(j, x(i,j)) =l= a(i) ; demand(j).. sum(i, x(i,j)) =g= b(j) ; | |

| options = optimoptions('linprog','Algorithm','dual-simplex'); [x,z] = linprog(C,A,B,[],[],lb,[],[],options); x=reshape(x,NumMarkets,NumPlants)'; z,x | ||

| model transport /all/ ; | ||

The output of the two models is similar. As the LP model has multiple optimal solutions, we actually find two different solutions.

| Matlab output | GAMS output | |

|---|---|---|

| z = 153.6750 x = 0 300 0 | ||

| ---- 66 VARIABLE z.L = 153.675 total transportation costs in $1000 ---- 66 VARIABLE x.L shipment quantities in cases new-york chicago topeka seattle 50.000 300.000 | ||

This was a very simple model model, about the simplest one can imagine. But even with this simple model we can illustrate the differences between a matrix oriented model system like Matlab and an equation based system like GAMS. Already in the Matlab code, I had to apply some careful matrix indexing tricks. The main reason is we need to map from variables \(x_{i,j}\) to matrix columns (each variable is a column in the coefficient matrices \(A_1\) and \(A_2\)), i.e. we have to map from a matrix to a vector. If we have multiple variables and/or variables that have an irregular shape, things become even more dicey in the Matlab approach.

A comparison of a slightly more complicated MIP model is shown in (4).

References

- http://www.gams.com/modlib/libhtml/trnsport.htm

- G.B.Dantzig, Linear Programming and Extensions, Princeton University Press, Princeton, New Jersey, 1963.

- Robert Fourer, “Modeling languages versus matrix generators for linear programming”, ACM Transactions on Mathematical Software, Volume 9, Issue 2, June 1983, Pages 143-183.

- http://yetanothermathprogrammingconsultant.blogspot.com/2016/10/matlab-vs-gams-integer-programming.html shows a Matlab example of a slightly more complex MIP where Matlabs matrix approach becomes somewhat nightmarish.