I received some questions and feedback on this post: http://yetanothermathprogrammingconsultant.blogspot.com/2015/10/piecewise-linear-functions-in-mip-models.html. Here are some “back to basics” notes on modeling piecewise linear functions. I only look at the easy case: one dimensional, i.e. y=f(x). This is all simple stuff, but it may be useful to show all the different cases in a row. (At least I can now simplify answering a lot of emails by referring to this post.)

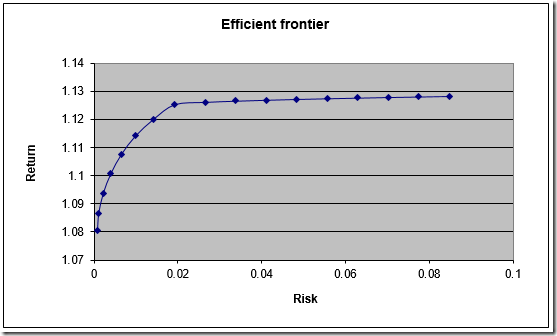

Continuous, bounded convex case

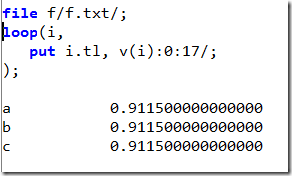

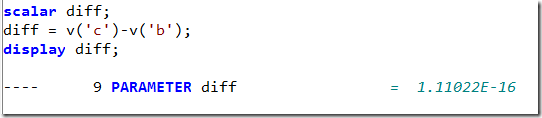

An example is:

The slope and the intercept can be calculated as:
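As a minimal sketch of this calculation in Python: each segment's slope follows from two consecutive breakpoints, and the intercept from requiring the line to pass through the first of them. The breakpoints `xp` and `yp` are hypothetical values (chosen only so that x runs from 10 to 45), and the name `segment_coefficients` is an illustrative assumption, not part of the original model.

```python
def segment_coefficients(xp, yp):
    """Return one (slope, intercept) pair per segment between consecutive breakpoints."""
    coeffs = []
    points = list(zip(xp, yp))
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        slope = (y1 - y0) / (x1 - x0)
        intercept = y0 - slope * x0   # the line passes through (x0, y0)
        coeffs.append((slope, intercept))
    return coeffs

# Hypothetical breakpoints of a concave curve on 10 <= x <= 45.
xp = [10, 20, 30, 45]
yp = [5, 20, 30, 37.5]

coeffs = segment_coefficients(xp, yp)
print(coeffs)
```

Doing this in a data preparation step keeps the model equations themselves free of divisions and differences.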

If the model is convex, i.e. if we somehow maximize y using the above curve, then we can simply write:

If we are minimizing y then the curve needs to be sloping up. E.g. in electricity power generation models we often see quadratic cost (to be minimized) as a function of output. Of course the sign in the BoundY(s) equation needs to be flipped to ≥ in such a case. (Here we also have a possible opportunity to formulate the problem as a MIQP, although we often see that a piecewise linear model performs better.)

To emphasize: if we can exploit this convexity, then we don’t need any additional discrete variables.
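A small Python check may make this concrete. It assumes the BoundY(s) equations have the form y ≤ slope(s)·x + intercept(s), and uses hypothetical data for a concave curve. When y is maximized, the largest y allowed by all these inequalities is the minimum of the segment lines, and for a concave curve that minimum coincides with the curve itself.

```python
# Hypothetical concave curve: breakpoints and the matching (slope, intercept) pairs.
xp = [10, 20, 30, 45]
yp = [5, 20, 30, 37.5]
coeffs = [(1.5, -10.0), (1.0, 0.0), (0.5, 15.0)]

def interpolate(x):
    """Value of the piecewise linear curve at x, by direct interpolation."""
    for x0, y0, x1, y1 in zip(xp, yp, xp[1:], yp[1:]):
        if x0 <= x <= x1:
            return y0 + (y1 - y0) * (x - x0) / (x1 - x0)
    raise ValueError("x is outside the breakpoints")

def best_y(x):
    """Largest y with y <= slope_s * x + intercept_s for every segment s."""
    return min(slope * x + intercept for slope, intercept in coeffs)

# Largest difference between the two over a grid of x values.
max_gap = max(abs(best_y(x) - interpolate(x)) for x in range(10, 46))
print(max_gap)
```

If the same curve were used while minimizing y, the inequalities would no longer pin y to the curve, which is why the non-convex case needs more machinery.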

The fact that we want to bound x between 10 and 45 is easily handled by adding lower and upper bounds to x. This case also deals directly with the unbounded case (see below for a further discussion of this), by just not adding a bound on x.

Continuous, bounded non-convex case

Now assume we don’t have convexity (for instance, we are not maximizing y using the above curve; e.g. because y represents cost). This means we need discrete variables.

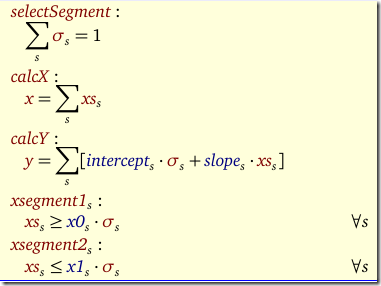

Using binary variables σs and additional continuous variables xss one could write:

Here xss= 0 if x is outside of the selected segment s, and xss= x if x is inside this segment s. The selection of a segment is indicated by σs=1. In the math above, variables are red and data (constants) are displayed in blue. We quickly see that we do not multiply variables by variables, and thus this is nicely linear.
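The logic of this formulation can be sketched in plain Python without a solver. The constraints coded below are an assumption about the formulation described above: exactly one σ is 1, the segment variables sum to x, each segment variable lies between xlo(s)·σ(s) and xup(s)·σ(s), and y is the sum of slope(s) times the segment variable plus intercept(s)·σ(s). The segment data and function names are hypothetical.

```python
# Hypothetical segments: (xlo, xup, slope, intercept).
segments = [
    (10, 20, 1.5, -10.0),
    (20, 30, 1.0, 0.0),
    (30, 45, 0.5, 15.0),
]

def satisfies_formulation(x, y, sigma, xs, tol=1e-9):
    """Check a candidate (x, y, sigma, xs) against the assumed constraints."""
    if any(s not in (0, 1) for s in sigma) or sum(sigma) != 1:
        return False                      # exactly one segment is selected
    if abs(sum(xs) - x) > tol:
        return False                      # x is the sum of the segment variables
    for (xlo, xup, _, _), sig, xseg in zip(segments, sigma, xs):
        if xseg < xlo * sig - tol or xseg > xup * sig + tol:
            return False                  # xlo*sigma <= xs <= xup*sigma
    y_calc = sum(slope * xseg + intercept * sig
                 for (_, _, slope, intercept), sig, xseg in zip(segments, sigma, xs))
    return abs(y_calc - y) <= tol         # y is linear in xs and sigma

def solution_for(x):
    """Build sigma, xs and y for a given x by selecting a segment that contains x."""
    for k, (xlo, xup, slope, intercept) in enumerate(segments):
        if xlo <= x <= xup:
            sigma = [1 if j == k else 0 for j in range(len(segments))]
            xs = [float(x) if j == k else 0.0 for j in range(len(segments))]
            return sigma, xs, slope * x + intercept
    raise ValueError("x lies outside all segments")

sigma, xs, y = solution_for(25)
print(sigma, xs, y)
```

Selecting the wrong segment for x = 25, e.g. `sigma = [1, 0, 0]` with `xs = [25, 0, 0]`, violates the upper bound of the first segment, so the check rejects it.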

This is a small improvement over the discussion in http://yetanothermathprogrammingconsultant.blogspot.com/2015/10/piecewise-linear-functions-in-mip-models.html as we simplified the equation CalcY by doing some data manipulation in advance. In general that is a good idea: it moves complexity away from the equations, which are the most difficult part of a model to debug. I am a big proponent of keeping the equations as simple as possible.

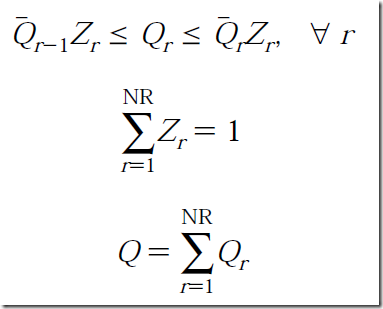

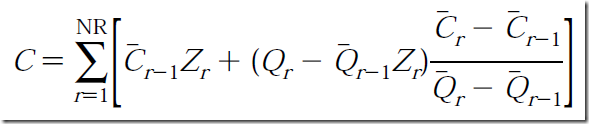

With SOS2 variables λp we can do the following. The data requirements are slightly different. We need a table like:

This will allow us to formulate:

Note that we have called the equation that sums the λ’s to one “convexity”. This is the typical name for this equation (even though we are dealing with a non-convex case).
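To illustrate what the SOS2 condition buys us, here is a small Python sketch. It assumes the usual form of this formulation: x and y are the λ-weighted sums of the breakpoint coordinates, and the λ's sum to one. The breakpoints and function names are hypothetical.

```python
# Hypothetical breakpoints.
xp = [10, 20, 30, 45]
yp = [5, 20, 30, 37.5]

def is_sos2(lam, tol=1e-9):
    """True if at most two lambdas are non-zero and, if two, they are neighbors."""
    nonzero = [i for i, v in enumerate(lam) if v > tol]
    return len(nonzero) <= 2 and (len(nonzero) < 2 or nonzero[1] - nonzero[0] == 1)

def lambdas_for(x):
    """Weights on the two breakpoints around x; all other lambdas stay zero."""
    lam = [0.0] * len(xp)
    for i in range(len(xp) - 1):
        if xp[i] <= x <= xp[i + 1]:
            w = (x - xp[i]) / (xp[i + 1] - xp[i])
            lam[i], lam[i + 1] = 1.0 - w, w
            return lam
    raise ValueError("x is outside the breakpoints")

def point(lam):
    """The (x, y) pair implied by a set of weights that sum to one."""
    x = sum(l * v for l, v in zip(lam, xp))
    y = sum(l * v for l, v in zip(lam, yp))
    return x, y

on_curve = point(lambdas_for(25))          # neighbors only: a point on the curve
off_curve = point([0.5, 0.0, 0.0, 0.5])    # sums to one, but not SOS2
print(on_curve, off_curve)
```

The second set of weights satisfies the convexity equation but mixes the two end points, giving a point below the curve. When minimizing y, a solver would happily exploit that unless the SOS2 condition forbids it.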

In the following sections we will assume that σs∈ {0,1} are binary variables and λp are SOS2 variables.

Semi-Continuous, bounded non-convex case

Now assume the data looks like:

This is not so unusual. You get a discount on the (total) price when you order more than some limit.

The binary variable formulation does not change at all: we can model this directly using the three segments in the table.

The SOS2 variable formulation needs some extra points. Our input for the SOS2 version becomes:

If the curve presents purchasing prices with discounts, this has interesting implications. In this formulation it may be beneficial to order more than you need, just to get the discount. In some models that means modeling some salvage value (can be positive or negative) or even storing it for a future period (adding to inventory).

Typically the solver will not pick a point on a vertical piece (except for the end points). The reason depends on the formulation. With the binary variable formulation, it is simply not possible to choose those points. With the SOS2 formulation, we could end up inside a vertical piece (not at an end point), but often we minimize or maximize y, which pushes the solution to one of the end points.
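A short Python sketch of the vertical piece in the SOS2 version, using hypothetical price data with a discount jump at x = 100. The jump is represented by listing the same x-value twice, once for each end of the vertical piece; putting weight on these two neighboring points is allowed by SOS2 and gives any y in between.

```python
# Hypothetical total-price curve with a discount jump at x = 100.
xp = [0, 100, 100, 200]
yp = [0, 1000, 900, 1800]

def point(lam):
    """The (x, y) pair implied by weights lam that sum to one."""
    x = sum(l * v for l, v in zip(lam, xp))
    y = sum(l * v for l, v in zip(lam, yp))
    return x, y

# Weights on the two neighboring points that share x = 100.
vertical = [point([0.0, w, 1.0 - w, 0.0]) for w in (0.0, 0.25, 0.5, 1.0)]
cheapest = min(y for _, y in vertical)
print(vertical, cheapest)
```

All of these points have x = 100, and a cost-minimizing model ends up at the lower end point of the jump.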

Strange case: discontinuous

This looks crazy but actually can happen. For instance some types of power generators cannot operate at certain intervals (prohibited zones). A reason could be that amplified vibrations occur at certain operating levels.

The binary variable formulation can deal with this case directly. Unfortunately the SOS2 formulation is not applicable here.
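In the binary variable formulation a prohibited zone needs no special treatment: the segment table simply has a gap. The Python sketch below uses hypothetical operating zones and lists which segments could be selected for a given x, assuming each selected segment forces xlo(s) ≤ x ≤ xup(s).

```python
# Hypothetical generator: operating zones 10..20 and 30..45, with 20..30 prohibited.
zones = [(10, 20), (30, 45)]

def admissible_segments(x):
    """Indices s for which selecting segment s is compatible with xlo_s <= x <= xup_s."""
    return [s for s, (xlo, xup) in enumerate(zones) if xlo <= x <= xup]

print(admissible_segments(15), admissible_segments(25), admissible_segments(45))
```

An x inside the gap has no admissible segment, so no feasible solution can place x there.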

Unbounded case

Now suppose that we don’t have an end point in the last segment (i.e. it just goes on forever). Alternatively, the first segment may lack a starting point. Neither approach can deal with this case directly. We have to use some possibly large bounds, but in practice that is not a problem. Usually we have some sense of a reasonable lower and upper bound on x.

Simulating SOS2 variables with binary variables

It is possible to mimic the behavior of SOS2 variables using binary variables. To do this we make λp positive variables and introduce some binary variables δp. Actually the last element of δp is not used: it has one element less than λp. The SOS2 structure forces that only two consecutive members of λp can be non-zero, and this condition we can simulate as follows:

Here we introduce a set p1 (a subset of p) with one fewer element than p. The twoeach constraint makes sure that only two neighbors of λp can be non-zero. The funny syntax indicates that we do something special for the last equation: the second δp is dropped there. This follows the discussion in H. Paul Williams' book “Model Building in Mathematical Programming”.
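The effect of these constraints can be checked by enumeration in Python. The sketch assumes the twoeach constraint reads λ(p) ≤ δ(p-1) + δ(p), with the missing δ term dropped for the first and the last point, and that exactly one δ equals 1. The function name is illustrative.

```python
def allowed_points(delta):
    """Points p whose lambda may be positive under lambda_p <= delta_{p-1} + delta_p."""
    n_points = len(delta) + 1            # delta has one element fewer than lambda
    allowed = []
    for p in range(n_points):
        bound = 0
        if p >= 1:
            bound += delta[p - 1]
        if p < len(delta):               # the second delta is dropped for the last point
            bound += delta[p]
        if bound > 0:
            allowed.append(p)
    return allowed

n_segments = 3
supports = []
for k in range(n_segments):
    delta = [1 if j == k else 0 for j in range(n_segments)]   # exactly one delta is 1
    supports.append(allowed_points(delta))
print(supports)
```

Each choice of δ leaves exactly two neighboring λ's free to be non-zero, which is the SOS2 condition.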

Logarithmic number of binary variables

Using a formulation from J. P. Vielma and G. L. Nemhauser, “Modeling Disjunctive Constraints with a Logarithmic Number of Binary Variables and Constraints,” Mathematical Programming, 128(1-2):49-72, 2011, we can get by with a logarithmic number of binary variables. The equations look like:

We need some clever values for L1, L2 (see the dissertation by Ernee Kozyre Filho):

and then we end up with something like:

The presence of the expressions 2^t gives a hint that this is related to the power-expansion technique: convert a general integer variable into a series of 0-1 variables.
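To give a feel for how a logarithmic number of binaries can enforce the SOS2 condition, here is a Python sketch of a Gray-code construction in the spirit of Vielma and Nemhauser. This is an assumption about the general idea, not a reproduction of the L1, L2 values used above: each segment gets a Gray code, and for each bit t the set `left[t]` (`right[t]`) holds the points whose neighboring segments all have bit t equal to 1 (0). The assumed constraints are: the sum of λ(p) over `left[t]` is at most δ(t), and the sum over `right[t]` is at most 1 − δ(t). All names are hypothetical.

```python
from itertools import product

def gray(s):
    """Reflected binary Gray code of s: consecutive codes differ in one bit."""
    return s ^ (s >> 1)

def build_sets(n_segments):
    """For every bit t, the points forced to zero when delta_t is 0 (left) or 1 (right)."""
    n_bits = max(1, (n_segments - 1).bit_length())
    left, right = [], []
    for t in range(n_bits):
        l_t, r_t = set(), set()
        for p in range(n_segments + 1):
            adjacent = [s for s in (p - 1, p) if 0 <= s < n_segments]
            bits = {(gray(s) >> t) & 1 for s in adjacent}
            if bits == {1}:
                l_t.add(p)
            elif bits == {0}:
                r_t.add(p)
        left.append(l_t)
        right.append(r_t)
    return left, right

def allowed_points(delta, left, right, n_segments):
    """Points whose lambda may still be positive for a given binary vector delta."""
    return [p for p in range(n_segments + 1)
            if all(not (p in left[t] and delta[t] == 0)
                   and not (p in right[t] and delta[t] == 1)
                   for t in range(len(delta)))]

n_segments = 5
left, right = build_sets(n_segments)
supports = []
for delta in product((0, 1), repeat=len(left)):
    pts = allowed_points(delta, left, right, n_segments)
    if pts:                       # an empty list means this delta is infeasible
        supports.append(pts)
supports.sort()
print(len(left), supports)
```

With 5 segments only 3 binary variables are needed, and every feasible δ leaves exactly two neighboring points available.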

Note that other formulations are possible. See e.g. Ming-Hua Lin, John Gunnar Carlsson, Dongdong Ge, Jianming Shi, and Jung-Fa Tsai, “A Review of Piecewise Linearization Methods,” Mathematical Problems in Engineering, 2013.