From this post we have a problem stated as:

My wife teaches AM and PM kindergarten classes. AM has 14 students and PM 11. At the beginning of each month, she puts out a new seating chart where she rotates students in such a way that they (ideally) sit at a different table and with different students for that month.

There are 3 students per table, but if numbers force the issue, the last may have more or less. We realize that, by the end of the year, there will be some unavoidable situations where students end up sitting with each other or at the same tables again, and this is okay.

Something like this I imagine:


A somewhat related problem is discussed in (1). As usual we have a set of binary variables that indicate the assignment of students to tables:

\[x_{s,t,m} = \begin{cases}1&\text{if student $s$ is seated at table $t$ in month $m$}\\
0&\text{otherwise}\end{cases}\]

The first set of equations is straightforward. Each student sits at exactly one table in each month:

\[\sum_t x_{s,t,m} = 1 \>\>\forall s,m\]

We cannot exceed the capacity of a table:

\[\sum_s x_{s,t,m} \le cap_t \>\> \forall t,m\]

In the complete model below I used an equality constraint for this: there is no slack capacity. Secondly, we want to keep track of how many times students sit at the same table. We introduce binary variables \(meet_{s1,s2,t,m}\in\{0,1\}\) indicating whether students \(s1\) and \(s2\) both sit at table \(t\) in month \(m\). The obvious nonlinear equation would be:

\[meet_{s1,s2,t,m} = x_{s1,t,m} \cdot x_{s2,t,m}\>\> \forall s1<s2,t,m\]

Note that we can exploit symmetry here: if we already compared \(s1,s2\) we do not need to bother about \(s2,s1\). The standard linearization is

\[\begin{align} & meet_{s1,s2,t,m} \le x_{s1,t,m}\\
& meet_{s1,s2,t,m} \le x_{s2,t,m}\\
& meet_{s1,s2,t,m} \ge x_{s1,t,m}+x_{s2,t,m}-1\end{align}\]

Because the objective pushes the variable \(meet\) down, we can drop the first two inequalities and keep only the last. Note that \(meet\) is then only a lower bound on the product \(x_{s1,t,m} \cdot x_{s2,t,m}\) rather than equal to it. When reporting results we should therefore use the underlying variables \(x\).
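The effect of dropping the two upper bounds can be checked by brute force over the four binary combinations. The following is a minimal sketch; the function name `feasible_meet_values` is made up for this illustration, and the third inequality is taken as \(meet \ge x_{s1,t,m}+x_{s2,t,m}-1\).

```python
from itertools import product

def feasible_meet_values(x1, x2, keep_upper_bounds=True):
    """Return the values of meet in {0, 1} allowed by the linearization."""
    allowed = []
    for meet in (0, 1):
        ok = meet >= x1 + x2 - 1            # the inequality we always keep
        if keep_upper_bounds:
            ok = ok and meet <= x1 and meet <= x2
        if ok:
            allowed.append(meet)
    return allowed

# Columns: x1, x2, allowed meet (all three inequalities), allowed meet (last one only)
for x1, x2 in product((0, 1), repeat=2):
    print(x1, x2,
          feasible_meet_values(x1, x2),
          feasible_meet_values(x1, x2, keep_upper_bounds=False))
```

With all three inequalities the only allowed value is the product. With only the last one, `meet = 1` is also allowed when the product is 0, but the smallest allowed value is still the product, which is what a minimizing objective will tend to pick.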

A count of the number of times students \(s1,s2\) sit at the same table is a simple aggregation:

\[meetcount_{s1,s2} = \sum_{t,m} meet_{s1,s2,t,m}\]

An obvious objective is to minimize the expression \(\max meetcount_{s1,s2}\). This is easily linearized:

\[\begin{align}\min\>&maxmeetcount\\
&maxmeetcount \ge meetcount_{s1,s2} \>\>\forall s1<s2\end{align}\]
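To make the two quantities concrete, here is a small sketch that computes the meet counts and their maximum directly from a seating plan, i.e. from the values of \(x\) rather than from \(meet\). The data layout (`plan[m]` as a list of tables) and the toy plan with 4 students are assumptions made for this example only.

```python
from itertools import combinations
from collections import Counter

def meet_counts(plan):
    """Count how often each pair shares a table.

    plan[m] is the list of tables for month m; each table is a list of student ids.
    """
    counts = Counter()
    for tables in plan:
        for table in tables:
            # Only pairs with s1 < s2, mirroring the symmetry used in the model
            for s1, s2 in combinations(sorted(table), 2):
                counts[s1, s2] += 1
    return counts

# Hypothetical toy data: 4 students, 2 tables of 2, 3 months
plan = [
    [[1, 2], [3, 4]],
    [[1, 3], [2, 4]],
    [[1, 2], [3, 4]],
]
counts = meet_counts(plan)
max_meet_count = max(counts.values())
print(dict(counts))
print(max_meet_count)
```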

Model

To test this we use 14 students and 11 months (I assume a one-month vacation period). Each of the 5 tables has a capacity of 3, except for one table with 2 students (this makes the capacities sum to 14).

The equations are exactly the same as discussed above:

To help the solver we fix the table assignment for the first month. In addition I assume student 1 always sits at table 1. This makes the model easier to solve. Note that I only fix some variables to 1. As a result, a lot of other variables must be zero. E.g. if \(x_{s1,t1,m}=1\) we know that \(x_{s1,t2,m}=0\), and similarly for all tables other than \(t1\). Instead of fixing all of these to zero myself, I leave it to the MIP presolver to do that for me. This fixing step helps a lot.

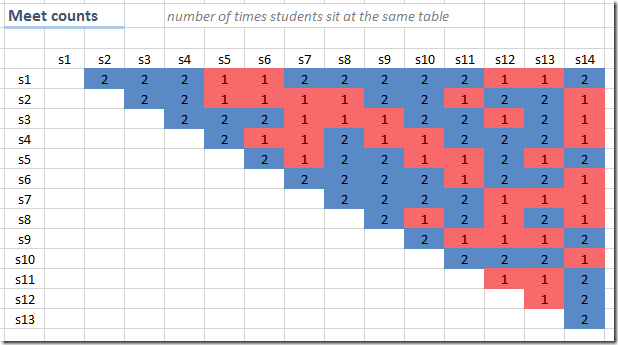

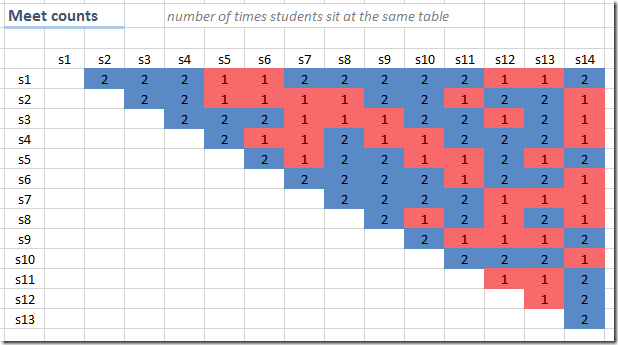

We quickly find a solution with an objective of 2, i.e. any pair of students sits at the same table at most twice.

Objective

In the above model we minimized the maximum number of times students sit at the same table. The optimal value is 2. However, this means that the number of times two students meet (the meet count, “meets” in the tables below) can float between 0 and 2, with no further incentive to decrease the average meet count within this band. This leads to a distribution as follows:

On average a pair of students sits at the same table 1.57 times. Detail: note that we need to base the calculations on the optimal values of \(x\) instead of \(meetcount\), as \(meetcount\) uses the variable \(meet\), which is just a bound on the product \(x_{s1,t,m} \cdot x_{s2,t,m}\).
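The 1.57 figure can be reproduced by hand. This is a back-of-the-envelope sketch, assuming the test data described earlier: four tables of 3 and one table of 2, all full, for 11 months. Every month each full table contributes a fixed number of pairs, so the total number of meetings depends only on the table sizes and not on who sits where.

```python
from math import comb, ceil

capacities = [3, 3, 3, 3, 2]   # table sizes from the test data
students = sum(capacities)     # 14
months = 11

pairs = comb(students, 2)                               # distinct student pairs
meets_per_month = sum(comb(c, 2) for c in capacities)   # pairs seated together each month
total_meets = months * meets_per_month
average = total_meets / pairs
lower_bound_max = ceil(total_meets / pairs)             # pigeonhole bound on the max meet count

print(pairs, meets_per_month, total_meets)
print(round(average, 2), lower_bound_max)
```

Two things follow from this arithmetic. The average meet count per pair is the same for every feasible seating plan, and since there are more meetings than pairs, at least one pair must meet twice, which is consistent with the optimal objective of 2.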

We can try to bring the average down by minimizing the sum of the meet counts. The results show that this does not actually bring the average down, but the distribution is certainly different. This objective produces a few student pairs with a high meet count:

We can try to minimize the number of student pairs meeting twice or more while still pushing down the maximum by using a composite objective:

\[\min maxmeetcount + \frac{count2}{100}\]

where \(count2\) is the number of pairs meeting twice or more. This gives a slightly different distribution:

A depiction of this distribution:
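The text does not show how \(count2\) is modeled. One possible linear formulation, offered only as a sketch, introduces a binary variable \(y_{s1,s2}\) (a name invented here) that must be 1 whenever a pair meets more than once. With 11 months a meet count never exceeds 11, so 10 serves as the big-M constant:

\[\begin{align}&meetcount_{s1,s2} \le 1 + 10\cdot y_{s1,s2} \>\>\forall s1<s2\\
&count2 = \sum_{s1<s2} y_{s1,s2}\\
&y_{s1,s2}\in\{0,1\}\end{align}\]

Because the objective pushes \(count2\) down, an optimal solution sets \(y_{s1,s2}=1\) only for pairs that meet twice or more.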

Other interesting objectives:

- Use a quadratic cost \(\sum meetcount_{s1,s2}^2\). This penalizes larger meet counts. This objective is somewhere between minimizing the sum and minimizing the max. However, MIQPs (Mixed Integer Quadratic Programming problems) are often more difficult to solve than linear models.

- Try to have students meet each other for the second time only after some months, i.e. spread repeated meetings of the same pair out over time. This is not so simple to model. A constraint that enforces, say, at least 2 months in between before a pair sits at the same table again is not so difficult (see below), but really maximizing the space in between is not so easy.

When do we meet again?

When we make a picture of when student pairs sit at the same table we get something like this:

The cells are color-coded by whether there are 0, 1, 2, or 3 or more months in between (the cells with a ‘3’ indicate that meets are separated by 3 or more months). Note that we did not display the pairs that sit at the same table only once.

To forbid the cases 0 and 1 in the above solution we can add the constraints:

\[\begin{align}
&meetm_{s1,s2,m} = \sum_t meet_{s1,s2,t,m} \>\>\forall s1<s2,m\\
&meetm_{s1,s2,m}+meetm_{s1,s2,m+1}+meetm_{s1,s2,m+2} \le 1 \>\>\forall s1<s2,m\end{align} \]

Here \(meetm\) is a simple aggregation of the variable \(meet\). The second constraint allows the pair \((s1,s2)\) to sit at the same table at most once in each three-month period.
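The sliding-window constraint can also be used as a check on a reported solution. The sketch below is an illustration only: the helper name `violates_window` and the representation of a pair's meetings as a set of month numbers are assumptions, not part of the original model.

```python
def violates_window(meet_months, n_months, window=3):
    """True if the pair meets more than once in any `window` consecutive months.

    meet_months is the set of months (1-based) in which the pair shares a table.
    """
    # meetm[m-1] plays the role of the aggregated variable for this pair
    meetm = [1 if m in meet_months else 0 for m in range(1, n_months + 1)]
    return any(sum(meetm[i:i + window]) > 1
               for i in range(n_months - window + 1))

print(violates_window({2, 3}, 11))   # 0 months in between
print(violates_window({2, 4}, 11))   # 1 month in between
print(violates_window({2, 5}, 11))   # 2 months in between
```

The first two calls report a violation and the third does not, matching the intent of forbidding the cases with 0 or 1 month in between.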

Note that now that we have the variable \(meetm\), we can simplify the constraint that calculates the meet count:

\[meetcount_{s1,s2} = \sum_m meetm_{s1,s2,m}\]

This will reduce the number of nonzero elements in this equation, so this is a good idea.

The new picture shows only 2s and 3s (where again 3 means at least 3 months in between). I.e. we successfully removed all cases where student pairs sit at the same table with zero or one month in between.

References

- A more complex table seating problem: http://yetanothermathprogrammingconsultant.blogspot.com/2016/10/scheduling-business-dinners.html

- A related problem: https://en.wikipedia.org/wiki/Kirkman's_schoolgirl_problem