Given a set of numbers with mean μ0 find a subset of at most K numbers that has a mean μ that is as close as possible to μ0.Of course we try immediately to cast this as an optimization problem. The obvious MINLP formulation would be:

There are a number of interesting aspects. Not all of them are completely trivial.

We added a lowerbound of 1 to cnt (the size of the subset). This way we can safely divide by cnt. We could replace the division by a multiplication (this is the usual approach to protect against 'division by zero'), but in this case this is not needed anymore. Also note that a mean or average of zero numbers is not really defined, so this lowerbound really makes sense.

This model contains two non-linearities: (1) the objective is quadratic and (2) we have this division in constraint avg. Note that if we would only consider subsets of exactly size K our problem becomes much simpler. In that case cnt would not be a variable but rather a constant parameter and the avg constraint would become automatically linear.

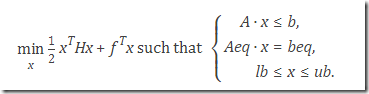

An obvious but interesting question is whether we can linearize this model. The quadratic objective can be approximated by using an absolute value measure. Actually there is some monotic transformation between the quadratic and absolute value criterion. This mans we will reach the same optimal solution using the absolution value method as using the quadratic objective.

The division is more complicated. However we can note that we can rewrite equations count and avg as follows:

This can be linearized as follows:

As usual it is important to find good, tight values for the big-M's. In this case we can find reasonably good values:

where μLO and μUP are bounds on μ, e.g. μLO=min{vi}, μUP=max{vi}.

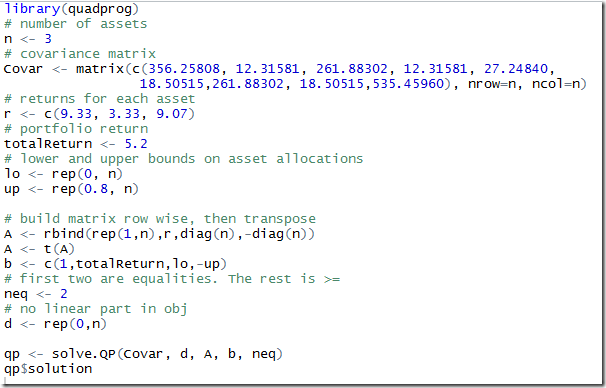

Now lets put everything together:

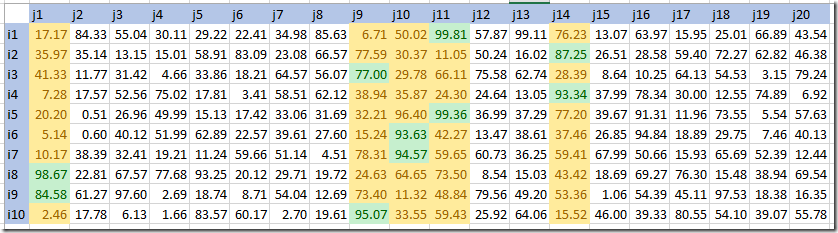

Here are some results:

We see that for K=5 we actually just use 4 numbers.

![image_thumb[1] image_thumb[1]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEg1p6752o1IaBBOPq63dIpc9-vrUJBUygH1NcMkaDmhDWcCVHF_LxhW47c9G2K36LK6Ct5BNPvVxa6zMcviWa1WybASdnjtFA5QDX21kSoqZEf2c0DC3OTZK57IhDuipdE1Z-YxTOJDT7ye/?imgmax=800)

![image_thumb[3] image_thumb[3]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEjmhNiPKPBhrzLWZbh-nrdbAanr9i1dsvG15sQqJ7COfcxuqnsWcpxuSXuda_JUekT29O33gqJ29BU-p_Y-mJGoFl5esTa7GtQrIGUSgv6rcg5wySukSQ894ehkdQ1QF02Rh7GMZnz0lgfg/?imgmax=800)

![image_thumb[5] image_thumb[5]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEiy1IVPybn51JhviLZFbZGBntrsxt7V9Ir9qHETW0frOKaHyP1fSyG5okLuRkzH6zbN4UTqvGcbvHYRas_tUNDy1TWez_D1FyWIfFcpUx92ne32T_gkcKSZj8cW8tCyy6UhfEOOpUCKap1o/?imgmax=800)