Facility location models [1] are one of the most studied areas in optimization. It is still interesting to build a model from scratch.

The question is [2]:

Given \(m\) customer locations, find the minimum number of warehouses \(n\) we need such that each customer is within \(\mathit{MaxDist}\) miles from a warehouse.

Model 1 : minimize number of warehouses

The idea I use here, is that we assign a customer to a warehouse and make sure the distance constraint is met. In the following let \(i\) be the customers, \(j\) be the (candidate) warehouses. We can define the binary variable:\[\mathit{assign}_{i,j} = \begin{cases}1 & \text{if customer $i$ is serviced by warehouse $j$}\\0 &\text{otherwise}\end{cases}\]

A high-level distance constraint is:

\[\begin{align} &\mathit{assign}_{i,j}=1 \Rightarrow \mathit{Distance}(i,j) \le \mathit{MaxDist}&&\forall i,j \\ &\sum_j \mathit{assign}_{i,j} =1 && \forall i\\&\mathit{assign}_{i,j} \in \{0,1\} \end{align}\]

The distance is the usual:

\[ \mathit{Distance}(i,j) = \sqrt{ \left( \mathit{dloc}_{i,x} - \mathit{floc}_{j,x} \right)^2 + \left( \mathit{dloc}_{i,y} - \mathit{floc}_{j,y} \right)^2 } \]

Here \(\mathit{dloc}, \mathit{floc}\) are the \((x,y)\) coordinates of the demand (customer) and facility (warehouse) locations. The \(\mathit{dloc}\) quantities are constants, opposed to \(\mathit{floc}\) which are decision variables: these are calculated by the solver.

This distance function is nonlinear. We can make it more suitable to be handled by quadratic solvers by reformulating the distance constraint as:

\[\left( \mathit{dloc}_{i,x} - \mathit{floc}_{j,x} \right)^2 + \left( \mathit{dloc}_{i,y} - \mathit{floc}_{j,y} \right)^2 \le \mathit{MaxDist}^2 + M (1-\mathit{assign}_{i,j})\]

where \(M\) is a large enough constant.

The next step is to introduce a binary variable: \[\mathit{isopen}_{j} = \begin{cases}1 & \text{if warehouse $j$ is open for business}\\0 &\text{otherwise}\end{cases}\] We need \(\mathit{isopen}_j=0 \Rightarrow \mathit{assign}_{i,j} =0\). This can be modeled as: \[\mathit{assign}_{i,j}\le \mathit{isopen}_j\] The objective is to minimize the number of open warehouses:\[\min n = \sum_j \mathit{isopen}_j\]

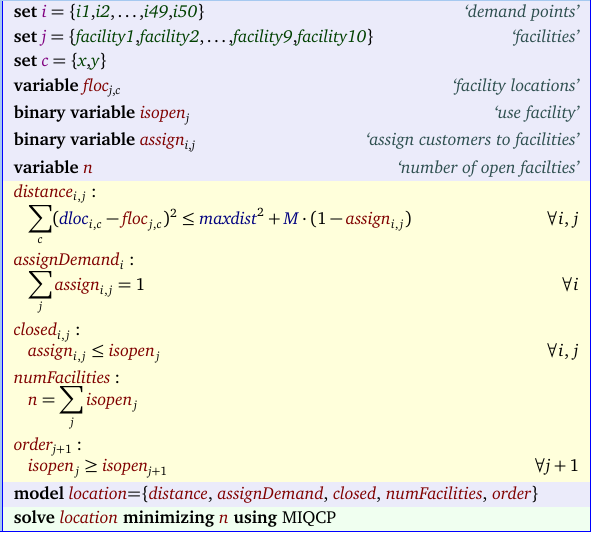

A complete model can look like:

The problem is a convex MIQCP: Mixed Integer Quadratically Constrained Problem. This model type is supported by solvers like Cplex and Gurobi. The last constraint

order is designed to make sure the open facilities are the first in the list of candidate facilities. It is also a way to reduce symmetry in the model.

To test this, I generated 50 random points with \(x,y\) coordinates between 0 and 100. The maximum distance is \(\mathit{MaxDist}=40\). The optimal solution is:

---- 60 VARIABLE n.L = 3.000 number of open facilties

---- 60 VARIABLE isopen.L use facility

facility1 1.000, facility2 1.000, facility3 1.000

---- 63 PARAMETER locations

x y

facility1 43.722 25.495

facility2 31.305 59.002

facility3 67.786 51.790

It is noted that

the only relevant result in the solution is \(n=3\) (the number of open facilities). The chosen location for the warehouses and the assignment is not of much value. We can see this from a picture of the solution, in particular the variables

floc and

assign:

|

| Misinterpretation of the solution |

Model 2: Optimal location of warehouses

We can solve a second model that given \(n=3\) warehouses obtains a solution that:

- assigns customers to their closest warehouse

- finds warehouse locations such that the distances are minimized

If we minimize the sum of the distances:

\[\min \sum_{i,j} \left[ \mathit{assign}_{i,j}\cdot \sqrt{\sum_c \left( \mathit{dloc}_{i,c} - \mathit{floc}_{j,c} \right)^2 }\right]\]

we achieve both goals in one swoop. Of course this is a nasty nonlinear function. We can make this a little bit more amenable to being solved with standard solvers:

\[\bbox[lightcyan,10px,border:3px solid darkblue]{\begin{align}\min &\sum_{i,j} d_{i,j}\\ &d_{i,j} \ge \sum_c \left( \mathit{dloc}_{i,c} - \mathit{floc}_{j,c} \right)^2 - M (1-\mathit{assign}_{i,j})&&\forall i,j\\&\sum_j \mathit{assign}_{i,j} =1 && \forall i\\&\mathit{assign}_{i,j} \in \{0,1\}\\&0\le d_{i,j}\le\mathit{MaxDist}^2 \end{align}}\] Note that now \(j = 1,\dots,n=3\). This is again a convex MIQCP model.

To be a little bit more precise: with this model we minimize the sum of the squared distances which is slightly different. The objective that models the true distances would look like \[\min\sum_{i,j} \sqrt{d_{i,j}}\] This would make the model no longer quadratic, so we would need to use a general purpose MINLP solver (or use some piecewise linear formulation). With the linear objective \(\min \sum_{i,j} d_{i,j}\) we place a somewhat higher weight on large distances. This is a different situation from the first model. There we also used the trick to minimize squared distances, but in that case it did not change the meaning of the model: it gives the same optimal solution. In the case of this second model the summation of squared distances will give slightly different results from actually minimizing the sum of distances.

When we solve this model we see a different location for the warehouses:

---- 78 PARAMETER locations

x y

facility1 24.869 18.511

facility2 72.548 46.855

facility3 24.521 74.617

The plot looks much better now:

|

| Optimal Warehouse Locations with Minimized Distances |

There are of course many other ways to attack this problem:

- Instead of using two models we can combine them. We can form a multi-objective formulation \(\min w_1 n + w_2 \sum_{i,j} d_{i,j}\) Use a large weight \(w_1\) to put priority on the first objective.

- Instead of minimizing the sum of the distances we can choose to minimize the largest distance.

- Instead of Euclidean distances we can use a Manhattan distance metric. This will give us a linear MIP model.

References