Given \(n\) circles with radius \(r_i\) and value \(v_i\) try to fill a rectangle of size \(W\times H\) with a subset of the circles (such that they don't overlap) and that maximizes the total value of the "payload".The data looks like:

---- 28 PARAMETER v value i1 4.237, i2 4.163, i3 2.183, i4 2.351, i5 6.302, i6 8.478, i7 3.077, i8 6.992 i9 7.983, i10 3.733, i11 1.994, i12 5.521, i13 2.442, i14 8.852, i15 3.386, i16 3.572 i17 6.346, i18 7.504, i19 6.654, i20 5.174 ---- 28 PARAMETER r radius i1 1.273, i2 4.295, i3 2.977, i4 1.855, i5 1.815, i6 1.508, i7 2.074, i8 4.353 i9 0.802, i10 2.751, i11 4.992, i12 3.104, i13 4.960, i14 3.930, i15 1.088, i16 3.379 i17 1.218, i18 1.625, i19 3.510, i20 2.459 ---- 28 PARAMETER a area i1 5.090, i2 57.945, i3 27.837, i4 10.812, i5 10.349, i6 7.146, i7 13.517, i8 59.535 i9 2.021, i10 23.775, i11 78.274, i12 30.275, i13 77.291, i14 48.525, i15 3.720, i16 35.864 i17 4.659, i18 8.299, i19 38.709, i20 18.998 ---- 28 PARAMETER W = 15.000 PARAMETER H = 10.000

The area was computed with the familiar formula: \(a_i = \pi r_i^2\). The mathematical model can look like:\[\bbox[lightcyan,10px,border:3px solid darkblue]{\begin{align}\max\>&\sum_i v_i \delta_i\\ & (x_i-x_j)^2 + (y_i-y_j)^2 \ge (r_i+r_j)^2 \delta_i \delta_j & \forall i\lt j \\ & r_i \le x_i \le W - r_i\\ & r_i \le y_i \le H - r_i\\ &\delta_i \in \{0,1\}\end{align}}\] Here the binary variable \(\delta_i\) indicates whether we select circle \(i\) for inclusion in the container. The variables \(x_i\) and \(y_i\) determine the location of the selected circles.

This turns out to be a small but difficult problem to solve. The packing problem is a difficult non-convex problem in its own right and we added a knapsack problem on top of it.

In [1] we found the following solutions:

| Solver | Objective |

|---|---|

| Local solver (BONMIN) | 58.57 |

| Improved by global solver (LINDOGLOBAL) | 60.36 |

Combinatorial Benders Decomposition

In the comments of [1] a variant of Benders Decomposition called Combinatorial Benders Decomposition is suggested by Rob Pratt. Let's see if we can reproduce his results. I often find it useful to do such an exercise to fully understand and appreciate a proposed solution.

First, lets establish that the total number of solutions in terms of the binary knapsack variables \(\delta_i\) is: \(2^n\). For \(n=20\) we have: \[2^{20} = 1,048,576\] This is small for a MIP. In our case unfortunately we have this non-convex packing sub-problem to worry about.

One constraint that can be added is related to the area: the total area of the selected circles should be less than the total area of the container: \[\sum_i a_i \delta_i \le A\] where \(A=W \times H\). This constraint is not very useful to add to our MIQCP directly, but it will be used in the Benders approach. The number of solutions for \(\delta_i\) that are feasible w.r.t. the area constraint is only 60,145. The number of these solutions with a value larger than 60.3 is just 83.

To summarize:

| Solutions | Count |

|---|---|

| Possible configurations for \(\delta_i\) | 1,048,576 |

| Of which feasible w.r.t area constraint | 60,145 |

| Of which have a value better than 60.3 | 83 |

Now we have a number that is feasible to enumerate. We know the optimal objective value should be in this set of 83 problem that are better than 60.3. So a simple approach is just to enumerate solutions starting with the best.

We can give this scheme a more impressive name: Combinatorial Benders Decomposition[2]. The approach can be depicted as:

We add a cut in each iteration to the master. This cut \[\sum_{i\in S} (1-\delta_i)\ge 1\] where \(S = \{i|\delta_i=1\}\), will cut off exactly one solution. In theory it can cut off more solutions, but because of the order we generate proposals, just one solution is excluded each cycle.

The sub model is used only to see if the proposal is feasible w.r.t. to our rectangle. The first sub problem that is feasible gives us an optimal solution to the whole problem. I implement the sub problem as a multistart NLP problem [3]. A slight rewrite of the sub problem gives a proper optimization problem:\[\begin{align} \max \>& \phi\\& (x_i-x_j)^2 + (y_i-y_j)^2 \ge \phi (r_i+r_j)^2&i\lt j\end{align}\] If the optimal value \(\phi^* \ge 1\) we are feasible w.r.t. to the original problem.

Of course, we could as well generate all possible configurations \(\delta_i\) that are feasible w.r.t. to the area constraint (there are 60,145 of them), order them by value (largest value first) and run through. Stop as soon as we find a feasible solution (i.e. where the packing NLP is feasible). No fancy name for this algorithm: "sorting" does not sound very sophisticated.

We can find a better solution with value 60.6134 using this scheme. Of course it is not easy. We need to solve 69 rather nasty non-convex NLPs to reach this solution.

|

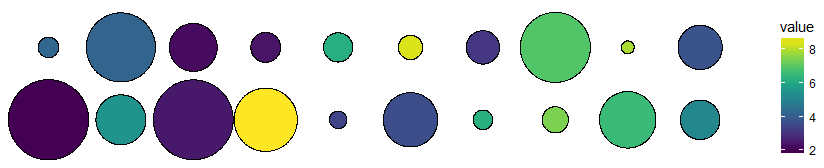

| Best Solution Found |

References

- Knapsack + packing: a difficult MIQCP, http://yetanothermathprogrammingconsultant.blogspot.com/2018/05/knapsack-packing-difficult-miqcp.html

- Codato G., Fischetti M. (2004) Combinatorial Benders’ Cuts. In: Bienstock D., Nemhauser G. (eds) Integer Programming and Combinatorial Optimization. IPCO 2004. Lecture Notes in Computer Science, vol 3064. Springer, Berlin, Heidelberg

- Multi-start Non-linear Programming: a poor man's global optimizer, http://yetanothermathprogrammingconsultant.blogspot.com/2018/05/multi-start-non-linear-programming-poor.html