For real-world, large-scale models, the performance of Python-based modeling approaches can be relatively poor. I suspect this is because, behind the high-level syntax, this is essentially a “scalar”-based approach: each individual constraint and variable leads to an object being created.
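To make the “scalar” point concrete, here is a minimal sketch of a toy modeling layer in that spirit. The classes `Var`, `LinExpr` and `Constraint` and the function `build_pmedian_scalar` are hypothetical and are not Pyomo's actual API. The model contains only two constraint families of a dense p-median-style model: assignment constraints (each customer is served by exactly one facility) and linking constraints (a customer can only be served by an open facility). With `n` customers and `m` facilities the sketch creates `n*m + m` variable objects and `n + n*m` constraint objects, each with its own expression object and dictionary.

```python
# Hypothetical toy "scalar" modeling layer (not Pyomo's API):
# every variable, every linear expression and every constraint
# is its own Python object.

class Var:
    __slots__ = ("name",)

    def __init__(self, name):
        self.name = name


class LinExpr:
    def __init__(self, terms=None):
        # maps Var object -> coefficient
        self.terms = dict(terms or {})


class Constraint:
    def __init__(self, expr, sense, rhs):
        self.expr, self.sense, self.rhs = expr, sense, rhs


def build_pmedian_scalar(n_cust, n_fac):
    """Build assignment and linking constraints, one object at a time."""
    x = {(i, j): Var(f"x[{i},{j}]") for i in range(n_cust) for j in range(n_fac)}
    y = {j: Var(f"y[{j}]") for j in range(n_fac)}
    cons = []
    # assignment: sum_j x[i,j] == 1 for every customer i
    for i in range(n_cust):
        expr = LinExpr({x[i, j]: 1.0 for j in range(n_fac)})
        cons.append(Constraint(expr, "==", 1.0))
    # linking: x[i,j] - y[j] <= 0 for every customer/facility pair
    for i in range(n_cust):
        for j in range(n_fac):
            expr = LinExpr({x[i, j]: 1.0, y[j]: -1.0})
            cons.append(Constraint(expr, "<=", 0.0))
    return x, y, cons


x, y, cons = build_pmedian_scalar(100, 50)
print(len(x) + len(y), "variable objects,", len(cons), "constraint objects")
```

With 100 customers and 50 facilities this already gives 5,050 variable objects and 5,100 constraint objects, and the counts grow with the product of the two index sets.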

From: https://software.sandia.gov/trac/coopr/raw-attachment/wiki/Pyomo/pyomo-jnl.pdf :

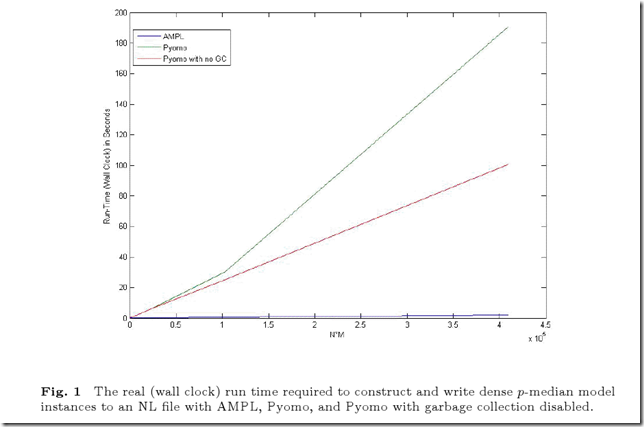

- up to 62 times slower (32 times when garbage collection is disabled) than AMPL on a large, dense p-median problem

- 6 times slower than GAMS on a complex production/transportation model

I don’t think this performance hit is mainly due to Python vs. C/C++, but rather to the abstraction level of the modeling framework. The framework would need to operate on blocks of variables, equations and parameters instead of on individual variables and equations. I suspect that a “proper” implementation of such a modeling framework (which is more complex than what is done here) would be able to achieve AMPL- and GAMS-like performance.
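A minimal sketch of what working with blocks could look like, assuming the constraint matrix is stored in coordinate (row, column, value) form. `VarBlock`, `ConstraintBlock` and `build_pmedian_blocks` are hypothetical names, and the model is a dense p-median-style structure with assignment rows `sum_j x[i,j] = 1` and linking rows `x[i,j] - y[j] <= 0`. Each indexed family of variables or constraints is a single Python object, and the coefficients live in flat typed arrays that could be handed to a solver as a sparse matrix. The generators below are still plain Python loops; the sketch only illustrates the data layout, and a serious implementation would fill these arrays with vectorized, compiled code.

```python
from array import array
from dataclasses import dataclass


@dataclass
class VarBlock:
    """Hypothetical: a whole indexed variable family, e.g. x[i,j], as one object."""
    name: str
    shape: tuple
    offset: int  # column index of the block's first element in the full model

    def col(self, *idx):
        # row-major position of element idx inside the block, shifted by offset
        pos = 0
        for k, n in zip(idx, self.shape):
            pos = pos * n + k
        return self.offset + pos

    @property
    def size(self):
        s = 1
        for n in self.shape:
            s *= n
        return s


@dataclass
class ConstraintBlock:
    """Hypothetical: a whole family of linear rows A x (sense) b in coordinate form."""
    name: str
    sense: str
    rows: array  # row index of each nonzero
    cols: array  # column index of each nonzero
    vals: array  # coefficient of each nonzero
    rhs: array   # one right-hand side per row


def build_pmedian_blocks(n_cust, n_fac):
    x = VarBlock("x", (n_cust, n_fac), 0)
    y = VarBlock("y", (n_fac,), x.size)
    # assign[i]: sum_j x[i,j] == 1, so row i has n_fac nonzeros
    assign = ConstraintBlock(
        "assign", "==",
        rows=array("q", (i for i in range(n_cust) for _ in range(n_fac))),
        cols=array("q", range(x.offset, x.offset + x.size)),
        vals=array("d", [1.0]) * x.size,
        rhs=array("d", [1.0]) * n_cust,
    )
    # link[i,j]: x[i,j] - y[j] <= 0, row r = i*n_fac + j has two nonzeros
    n_link = n_cust * n_fac
    link = ConstraintBlock(
        "link", "<=",
        rows=array("q", (r for r in range(n_link) for _ in range(2))),
        cols=array("q", (c for i in range(n_cust) for j in range(n_fac)
                         for c in (x.col(i, j), y.col(j)))),
        vals=array("d", [1.0, -1.0]) * n_link,
        rhs=array("d", [0.0]) * n_link,
    )
    return (x, y), (assign, link)


(x, y), (assign, link) = build_pmedian_blocks(100, 50)
print(len(assign.rhs) + len(link.rhs), "constraint rows in 2 block objects")
```

Here the number of Python objects grows with the number of *families* (two variable blocks, two constraint blocks), not with the number of individual variables and constraints.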