I believe any book on linear programming will mention LAD (Least Absolute Deviation) or \(\ell_1\) regression: minimize the sum of the absolute values of the residuals.

\[\begin{align}\min_{\beta}\>&\sum_i |r_i|\\ |

Here \(\beta\) are coefficients to estimate. So they are the decision variables in the optimization model. \(X,y\) are data (watch out here: in many optimization models we denote decision variables by \(x\); here they are constants). \(r\) are the residuals; it is an auxiliary variable that can be substituted out.

The standard LP models suggested for this problem are not very complicated, but still interesting enough to have them cataloged.

There are at least three common LP formulations for this: variable splitting, bounding and a dual formulation. Here is a summary:

- Variable splitting:\[\begin{align}\min\>&\sum_i r^+_i + r^-_i\\&r^+_i - r^-_i =y_i –\sum_j X_{i,j}\beta_j\\&r^+_i, r^-_i\ge 0\end{align}\]In this model, automatically one of the pair \((r^+_i,r^-_i)\) will be zero. We don’t have to add an explicit complementarity condition \(r^+_i \cdot r^-_i = 0\). This is fortunate: we can keep the model linear.

- Bounding:\[\begin{align}\min\>&\sum_i r_i\\&-r_i \le y_i –\sum_j X_{i,j}\beta_j \le r_i\end{align}\]Here \(r_i\) can be left free or you can make it a non-negative variable. It will be non-negative automatically. Note that there are actually two constraints here: \(-r_i \le y_i –\sum_j X_{i,j}\beta_j\) and \(y_i –\sum_j X_{i,j}\beta_j\le r_i\). This formulation is mentioned in [1].

- Sparse bounding:

In the standard bounding formulation we have all the data \(X_{i,j}\) twice in the model, leading to a large number of non-zero elements in the LP matrix. We can alleviate this by introducing an extra free variable \(d\): \[\begin{align}\min\>&\sum_i r_i\\&-r_i \le d_i \le r_i\\&d_i = y_i –\sum_j X_{i,j}\beta_j\end{align}\] Effectively we reduced the number of non-zeros by a factor of two compared to the standard bounding formulation. Note that the first constraint \(-r_i\le d_i \le r_i\) is actually two constraints: \(-r_i\le d_i\) and \(d_i\le r_i\). The sparse version will have fewer non-zero elements, but many more constraints and variables. Advanced LP solvers are based on sparse matrix technology and the effect of more nonzeros is often underestimated by novice users of LP software. The same arguments and reformulations can be used in \(\ell_1\) portfolio models [3]. - Dual:\[\begin{align}\max\>&\sum_i y_i d_i\\&\sum_i X_{i,j} d_i=0 \perp \beta_j \\&-1\le d_i \le 1\end{align}\] The optimal values for \(\beta\) can be recovered from the duals for the constraint \(X^Td=0\) (this is what the notation \(\perp\) means).

One could make the argument the last formulation is the best: it has fewer variables than variable splitting, and fewer equations than the bounding approach. In addition, as mentioned before, the standard bounding formulation has the data twice in the model, resulting in a model with many nonzero elements.

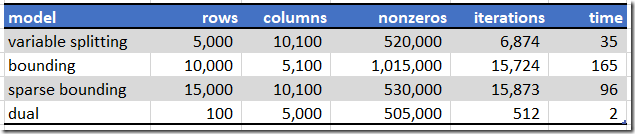

Modern LP solvers are not very well suited for these type of models. They like very sparse LP models, while these models are very dense. Let’s try anyway with a large, artificial data set with \(m=5,000\) cases, and \(n=100\) coefficients. The data matrix \(X\) has 500,000 elements. Some timings with Cplex (default LP method) yield the following results:

Times are in seconds.

The dual formulation seems indeed quite fast. It is interesting that the bounding model (this formulation is used a lot) is actually the slowest. Note that these results were obtained using default settings. This effectively means that Cplex selected the dual simplex solver for all these instances. These timings will change when the primal simplex method or the barrier algorithm is used.

L1fit

The R package L1pack [5] contains a dense, simple (compared to say Cplex), specialized version of the Simplex method based on an algorithm from [6,7]. It is actually quite fast on the above data:

The number of Simplex iterations is 561. This confirms that sparse, general purpose LP solvers have a big disadvantage on this problem (usually Cplex would beat any LP algorithm you can come up yourself, by a large margin).

See the comment from Robert Fourer for some notes on the Barrodale-Roberts algorithm. So I think I need to refine my previous statement a bit: there are two reasons why l1fit is doing so well: (1) dense data using a dense code and (2) a specialized Simplex method handling the absolute values. The number of iterations is close to our dual formulation (which is also very compact), so the time per iteration is about the same as Cplex.

Historical note

The concept of minimizing the sum of absolute deviations goes back to [4].

The term quaesita refers to “unknown quantities”.

F.Y. Edgeworth, 1845-1926.

I noticed that Edgeworth was from Edgeworthstown, Ireland (population: 2,335 in 2016).

References

- Least absolute deviations, https://en.wikipedia.org/wiki/Least_absolute_deviations

- A.Giloni, M.Padberg, Alternative Methods of Linear Regression, Mathematical and Computer Modeling 35 (2002), pp.361-374.

- L1 portfolio formulation, http://yetanothermathprogrammingconsultant.blogspot.com/2010/04/l1-portfolio-formulation.html

- Francis Ysidro Edgeworth, On observations relating to several quantities, Hermathena 6 (1887), pp. 279-285

- Osorio, F., and Wołodźko, T. (2017). L1pack: Routines for L1 estimation, https://cran.r-project.org/web/packages/L1pack/index.html

- Barrodale, I., and Roberts, F.D.K. (1973). An improved algorithm for discrete L1 linear approximations. SIAM Journal of Numerical Analysis 10, 839-848

- Barrodale, I., and Roberts, F.D.K. (1974). Solution of an overdetermined system of equations in the L1 norm. Communications of the ACM 17, 319-320.

No comments:

Post a Comment