When exporting some large solution sets from GAMS I was wondering about the size improvements we can get by storing strings in a pool.

The output data is organized in a very large “cube”, i.e. a single large parameter (in GAMS language) or table (when looked at from an RDBMS). In the GAMS GDX viewer the parameter results(impactparameter, scenario, commodity, region, productiontype, year) looks like:

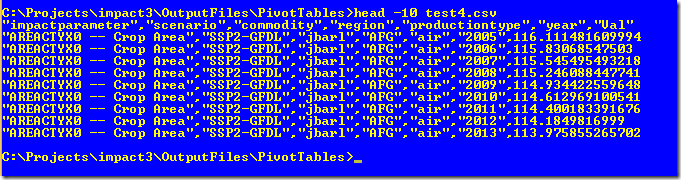

Note that some of the strings are fairly large (the first index), while most are coded efficiently (e.g. three letter country codes). When we export this to a csv file or a database typically we export this as a single large table:

If we look at the table below we are a little bit surprised about the file sizes:

This is a small test case: we can expect larger file sizes in practice (more scenarios, more impact parameters, more production technologies). The SQLite databases were exported with GDX2SQLITE. The compressed GDX files were generated with the environment variable GdxCompress turned on (see http://yetanothermathprogrammingconsultant.blogspot.com/2010/04/gamsgdx-compression.html).

One reason why GAMS GDX files may be more efficient is that strings (corresponding to set elements in GAMS) are stored in a separate data structure. This pool stores only Unique ELements (or UELs). Of course we can also export the data in this form:

- Export the UELs into a separate table (called UELS$).

- Export the parameters with integers in the index positions (these numbers correspond to the UEL$ table). For the example above we store this in a table results$.

- To make it easy for the user we create an SQL VIEW called results that performs the joins to get the string data. You still can do select * from results and get the same results. This database schema is actually significantly more efficient w.r.t. space. The real data is stored in the the uels$ and results$ table. The view does not store any data: the joins are performed when we do a select.

It is interesting to see how few UELs typical models have. This big parameter results has more than 4 million records but it has only 339 unique elements:

or differently derived:

I.e. the UELS$ table will be quite small. A really smart query optimizer may build a hash table out of this for quick lookup (I have seen SQL Server doing these kind of tricks).

The SQL VIEW itself – performing the joins – is somewhat ugly, but as it is automatically generated we don’t really care:

The user can just use results as it were a normal table and does not need to worry about what is happening underneath.

This organization is a little bit like the star schema used in data warehousing and OLAP. It is a bit special as for each dimension we use the same dimension table. If we have explicit sets for each dimension we really could implement a star schema. Again with a proper SQL VIEW we can hide the ugly details from the user.

Of course the GDX files themselves are even more compact (by a large margin!). I am pushing for an ODBC driver for GDX files: that would give us direct access to GDX files as if they were SQL tables. This is both useful for end-users with tools that can use ODBC data sources (e.g. Excel, Tableau, SQL Server, Access) and for programmers who are more familiar with relational data compared to using the GDX API directly (you really need to know GAMS before you can use the GDX API).

No comments:

Post a Comment