I received some additional questions on solving some very large network models. Basically we used the formulation presented here: http://yetanothermathprogrammingconsultant.blogspot.com/2011/06/network-formulation.html. A slightly more optimized version is shown below:

| $ontext |

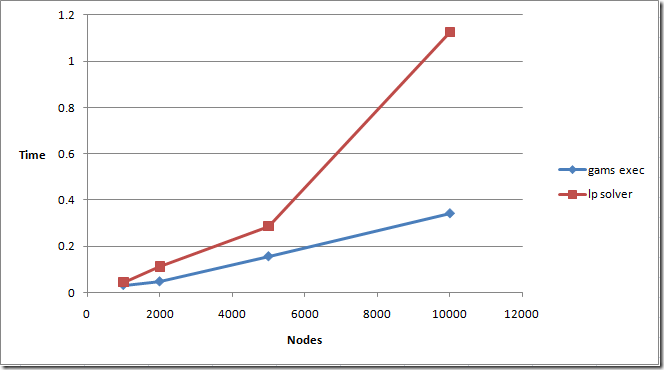

The performance of GAMS looks very good. GAMS execution time scales slightly better than the LP solver used to solve the problem:

GAMS almost scales linearly with the number of nonzero elements to be generated. In this case that means linearly with the number of nodes (because the number of nonzeroes is equal to 10*nodes in these models).

The main difference with the earlier model is that we replaced:

x.up(i,j) = capacity(i,j);

by

x.up(arcs) = capacity(arcs);

This actually saves some time and memory. In the first case we set many x.up’s to zero even though they are not used in the model. These variables with non-default bounds will need to be created and use up memory. In the second case, we only touch variables that are really used.

This small change makes a lot of difference for larger models. For n=5k we see GAMS execution time decrease from 3.775 seconds to 0.156 seconds. If things get large one needs to pay attention to detail!

AMPL Version

A direct translation to AMPL can look like:

| set nodes; set arcs within (nodes cross nodes) := {i in nodes, j in nodes: capacity[i,j]}; # version 1 : slow calc of arcs param rhs{i in nodes} := if i in source then -1 else if i in sink then +1; var x {(i,j) in arcs} >= 0, <= capacity[i,j]; maximize obj:f; subject to flowbal{i in nodes}: |

This formulation is not handled very efficiently by AMPL: the timings for n=5k are

| ##genmod times: |

Especially the calculation of the set arcs is expensive.

Note that if we use an alternative for arcs:

set arcs := {i in nodes, j in nodes: capacity[i,j]};

the burden is moved to x:

| ##genmod times: |

When we run a few different instances we see we do something causing non-linear scaling:

It is clear this is not a good approach. We need to do something about this set arcs. In AMPL we don’t really need to calculate this set, we can directly populate this from the data by replacing in the data file:

| param: capacity := |

by

| param: arcs : capacity := |

Now we get the set arcs basically for free, and we get linear scaling:

The total AMPL generation time vs. LP solution time is here:

This is very close to the GAMS timings given that this was on a different machine.

GLPK

The open source tool GLPK can handle many AMPL linear models. However, in some cases it is substantial slower. This model demonstrates this behavior. We run the model as:

| C:\projects\tmp>timeit \Projects\glpk\glpk\bin\glpsol.exe -m network.mod -d network1000.dat Version Number: Windows NT 6.0 (Build 6002) C:\projects\tmp> |

Besides being much slower than AMPL, the performance of the model generation phase is showing more than linear scaling:

Model generation is here calculated as total elapsed time minus time used for the LP solver.

My conclusions:

- AMPL and GAMS are capable of generating simple network models faster than a good LP solver can solve them

- But a simple mistake and performance goes down the drain

- Direct translations between GAMS and AMPL models are not always a good idea.

No comments:

Post a Comment