| In this post I described a simple way to export a large GAMS data set to a CSV file which can be used as an external data source by Pivot Tables. Basically this was needed as Excel has a limit of a million rows, so we cannot store the data in Excel itself as a table. However newer versions of Excel have a facility called PowerPivot that allows us to store a very large data (or even multiple tables) in what is called a “data model”. This is an internal memory based database (“in-memory analytics engine”), and it does not have a strict row limit. Subsequently, we can create a Pivot Table based on this data. Here is how to set this up interactively. |

Recipe

- Write the GAMS data to a GDX file. The easiest is to create a single large multi-dimensional “Cube” parameter.

- Call GDXDUMP to create a CSV file.

- In Excel 2016 use New Query in the Data tab to import the CSV file.

- In the Query Editor select “Close & Load To".

- In the dialog box unselect “Table” and select “Add this data to the Data Model”.

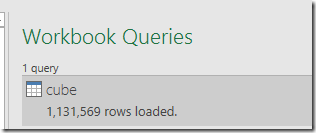

- After pressing the Load button you will see something like:

We are just exceeding the Excel row limit, but no sweat here. - In the Insert tab, select PivotTable. Use the Data Model as data source.

- After OK we have our pivot table:

Limits

The limits are discussed here.

The .xlsx file containing the data can become large. After reading a the above cube.csv file (63 MB) the resulting .xlsx file is only 3 MB. So the compression may actually be doing very well.

The row limit of a table in the data model seems to be: 1,999,999,997, well beyond the row limit of Excel sheets.

Notes:

- PowerPivot/PowerQuery is fairly new. I encountered some crashes of Excel when using more advanced features.

- New features are continuously added. See: https://blogs.office.com/2016/06/21/june-2016-updates-for-get-transform-in-excel-2016-and-the-power-query-add-in/.

- Not sure how the compatibility works out. Can I send this spreadsheet to anyone with Excel 2016? (Or 2013?)

- I tried quickly to move these steps into a VB script. That did not really work. The generated VBA code by the macro recorder is somewhat ugly for these Power Query calls. May be I should try again but then pay more attention to the details.

- The pivot table seems more responsive than with the external data source. Having all in-memory helps.

- Advantage of having the data embedded: .xslx file is self-contained. With external data source one needs to ship several files.

- With the option Manage Data Model in the Data tab we can look at the tables stored in the data model and perform operations. This is not an Excel table and the syntax for formulas is different (using a language called DAX – Data Analysis Expressions). See here.

No comments:

Post a Comment